In the ever-evolving landscape of machine learning, staying at the forefront of innovation is paramount. Today, we are thrilled to announce a significant expansion of Kolena. Building on our commitment to empowering data scientists and ML engineers, we are introducing support for tabular and audio data, alongside continued support for computer vision and NLP data, opening up new horizons for testing and debugging machine learning models.

Kolena was born from a project that our co-founders, Mohamed Elgendy, Andrew Shi, and Gordon Hart, had worked on at a previous company. They presented a machine learning model with 99% accuracy in a customer demo, only for it to embarrassingly miss an obvious object.

The fallout was irreparable: the customer’s trust was lost, and with it the business. At the same time, though, the experience opened their eyes to a glaring pain point in the way AI / ML models are developed and selected for production.

After additional research, they learned that another version of the model, with 96.3% accuracy, actually performed better on critical subclasses (including the scenario in which the 99% model failed). Had they presented the 96.3% model, that customer trust would not have been broken. They realized then that traditional model testing frameworks don’t provide the level of granularity that the industry desperately needs to validate the performance of AI / ML products with confidence.
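
To make the gap between aggregate and subclass accuracy concrete, here is a minimal sketch in plain Python. It does not use the Kolena API. The function name `accuracy_by_scenario`, the scenario labels, and the toy counts are all hypothetical, chosen only to illustrate the pattern, and are not the actual results from the demo described above.

```python
from collections import defaultdict


def accuracy_by_scenario(records):
    """Return (overall_accuracy, {scenario: accuracy}).

    `records` is an iterable of (scenario, is_correct) pairs.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for scenario, is_correct in records:
        totals[scenario] += 1
        hits[scenario] += int(is_correct)
    per_scenario = {s: hits[s] / totals[s] for s in totals}
    overall = sum(hits.values()) / sum(totals.values())
    return overall, per_scenario


# Hypothetical toy results: 100 samples per model, 2 of them in a "critical" scenario.
model_a = [("common", True)] * 98 + [("critical", True)] + [("critical", False)]
model_b = [("common", True)] * 94 + [("common", False)] * 4 + [("critical", True)] * 2

for name, records in (("model_a", model_a), ("model_b", model_b)):
    overall, per_scenario = accuracy_by_scenario(records)
    print(name, "overall:", overall, "critical:", per_scenario["critical"])
```

In this toy data, `model_a` has the higher overall accuracy but gets only half of the critical samples right, while `model_b` scores lower overall and gets every critical sample right. A single aggregate number hides exactly that difference.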

Kolena’s goal, then and now, is to bridge the gap in ML tooling and solve one of AI’s biggest problems: the lack of trust in model effectiveness. The potential use cases for AI are enormous, but AI lacks trust from both builders and the public. It is our responsibility to build that trust with full transparency and explainability of ML model performance, not just through a high-level aggregate ‘accuracy’ number, but through rigorous testing and evaluation at the scenario level.

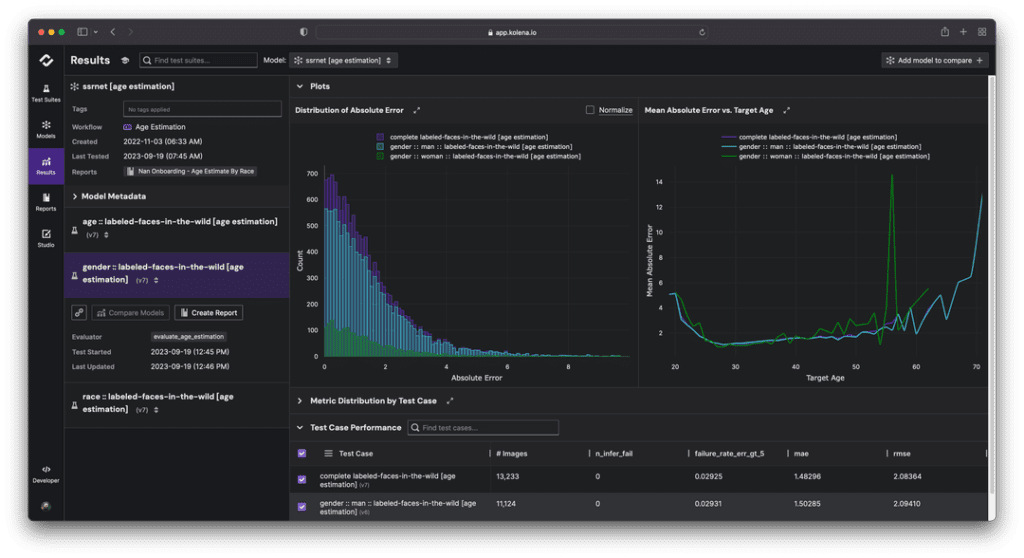

With Kolena, machine learning engineers and data scientists can uncover hidden model behaviors, easily identify gaps in test data coverage, and truly learn where and why a model is underperforming, all in minutes, not weeks. Kolena’s AI / ML model testing and validation solution helps developers build safe, reliable, and fair systems by allowing companies to quickly stitch together razor-sharp test cases from their datasets, enabling them to scrutinize AI / ML models in the precise scenarios those models will face in the real world. The Kolena platform transforms AI development from an experimental practice into an engineering discipline that can be trusted and automated.

We are dedicated to pushing the boundaries of what’s possible in machine learning testing and debugging. With the inclusion of tabular and audio data support, we are enabling data scientists and engineers to tackle an even broader range of challenges with confidence and precision.

We invite you to explore the expanded capabilities of Kolena and experience firsthand how our platform can accelerate your AI / ML projects across diverse data types. As always, we are committed to your success, and we look forward to the innovative solutions that this expansion will unlock.

Stay tuned for more exciting updates from Kolena as we continue our journey to empower the future of AI and machine learning.