What Are Generative Models in Machine Learning?

Generative models are a class of statistical models mainly used in unsupervised learning, a type of machine learning (ML). These models are capable of generating new data instances that resemble your training data.

Generative models learn the data distribution of an input training set, with the goal of generating new data points that resemble the initial training set. This means that these models are capable of understanding and replicating the nuances of your data. Generative models have found broad application, ranging from image generation to natural language understanding and synthesis, and are the basis of the latest generation of AI systems powered by large language models (LLMs).

In the larger context of machine learning, generative models are often contrasted with discriminative models. While discriminative models aim at mapping the input data to appropriate labels, generative models focus on understanding the underlying data distribution.

This is part of an extensive series of guides on AI technology.

How Generative Modeling Works

Generative models work by capturing the joint probability distribution over the variables in the data. In many models, this includes both observed variables (the actual data points) and latent variables (unobserved variables that the model infers to explain the structure of the data). This joint distribution is then used to generate new data. The primary focus of these models is to understand the underlying structure and distribution of the data.

The process begins with the model being fed a set of training data. The model then learns the probability distribution of this data. Once trained, the model can generate new data instances by sampling from this learned distribution. Generative models can fill in missing data or imagine new data instances not seen during training.
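The fit-then-sample process described above can be sketched with the simplest possible generative model, a one-dimensional Gaussian. This is a minimal illustration, not a practical model: the training data is synthetic, and the function names `fit_gaussian` and `sample` are hypothetical.

```python
import random
import statistics

def fit_gaussian(data):
    """Estimate the mean and standard deviation of 1-D data."""
    return statistics.mean(data), statistics.stdev(data)

def sample(mean, std, n, rng):
    """Draw n new points from the learned Gaussian."""
    return [rng.gauss(mean, std) for _ in range(n)]

rng = random.Random(0)
# Hypothetical training set: 1,000 draws from a Gaussian with mean 5 and std 2.
training_data = [rng.gauss(5.0, 2.0) for _ in range(1000)]

# "Training" here is just estimating the parameters of the distribution.
mean, std = fit_gaussian(training_data)

# Generation is sampling from the learned distribution.
new_points = sample(mean, std, 5, rng)
print(f"learned mean={mean:.2f}, std={std:.2f}")
print("generated:", [round(x, 2) for x in new_points])
```

Real generative models replace the Gaussian with a far more expressive distribution, but the two steps (estimate the distribution, then sample from it) stay the same.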

The implementation of generative models varies depending on the complexity of your data and the specific task at hand. The choice of the generative model architecture helps determine the quality of the generated data.

4 Types of Generative Model Architectures

1. Bayesian Networks

Bayesian networks are among the simplest types of generative model. They describe a set of random variables and their conditional dependencies using a directed acyclic graph (a graph whose edges have a direction and which contains no cycles). Each node in the graph represents a random variable, while the edges (arrows) between nodes represent probabilistic dependencies among the corresponding variables.

Bayesian networks can handle uncertainty and reason probabilistically about data. They are widely used in various fields such as medical diagnosis, image processing, information retrieval, and bioinformatics.
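As a rough illustration of how a Bayesian network generates data and reasons under uncertainty, the sketch below samples from a three-node network and estimates a conditional probability from the samples. The network structure (Rain, Sprinkler, WetGrass) and all probability values are made up for this example.

```python
import random

# Hypothetical conditional probability tables for a three-node network:
# Rain -> Sprinkler, and (Rain, Sprinkler) -> WetGrass.
P_RAIN = 0.2
P_SPRINKLER_GIVEN_RAIN = {True: 0.01, False: 0.4}
P_WET_GIVEN = {  # keyed by (rain, sprinkler)
    (True, True): 0.99,
    (True, False): 0.8,
    (False, True): 0.9,
    (False, False): 0.0,
}

def sample_network(rng):
    """Ancestral sampling: draw each variable after its parents."""
    rain = rng.random() < P_RAIN
    sprinkler = rng.random() < P_SPRINKLER_GIVEN_RAIN[rain]
    wet = rng.random() < P_WET_GIVEN[(rain, sprinkler)]
    return rain, sprinkler, wet

rng = random.Random(0)
samples = [sample_network(rng) for _ in range(20000)]

# Estimate P(Rain | WetGrass) by keeping only samples where the grass is wet.
wet_samples = [s for s in samples if s[2]]
p_rain_given_wet = sum(s[0] for s in wet_samples) / len(wet_samples)
print(f"P(rain | wet grass) is approximately {p_rain_given_wet:.2f}")
```

Sampling variables in the order given by the graph is what makes the network generative; filtering the samples on an observation is a simple (if inefficient) form of probabilistic inference.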

2. Generative Adversarial Networks (GANs)

GANs consist of two neural networks, the generator and the discriminator, that compete with each other in a zero-sum game framework. The generator tries to produce data that the discriminator cannot distinguish from the real data, while the discriminator tries to identify the fake data created by the generator.
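The competition between the two networks is commonly written as a minimax objective. The formulation below follows the original GAN paper (Goodfellow et al., 2014), which this guide does not otherwise cite. The symbols are notation introduced here: G is the generator, D is the discriminator, x is a real data point, and z is random noise fed to the generator.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes V by assigning high probability to real data and low probability to generated data, while the generator minimizes V by producing samples that the discriminator scores as real.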

Since their introduction, GANs have been used in a wide range of applications, including image synthesis, semantic image editing, style transfer, and more. State-of-the-art GAN models can generate realistic images that are often difficult to distinguish from real images.

3. Variational AutoEncoders (VAEs)

VAEs are another type of generative model, widely used for their ability to perform both inference and generation. VAEs are based on neural networks, but unlike GANs, they have a well-defined loss function that can be optimized directly.
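The loss function in question is usually derived from the evidence lower bound (ELBO), shown here in its standard form. The notation is an assumption introduced for this illustration: q is the encoder that infers latent variables z from data x, p(x | z) is the decoder, and p(z) is a fixed prior, typically a standard Gaussian.

```latex
\mathcal{L}(\theta, \phi; x) =
  \mathbb{E}_{q_{\phi}(z \mid x)}\big[\log p_{\theta}(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_{\phi}(z \mid x) \,\|\, p(z)\big)
```

Training maximizes this quantity (or, equivalently, minimizes its negative). The first term rewards accurate reconstruction of the input, and the second keeps the inferred latent distribution close to the prior so that sampling from the prior yields plausible new data.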

VAEs are particularly suited to generating specific, new data instances. Some of their applications include generating human faces, reconstructing missing parts of images, and creating digital art.

4. Autoregressive Models

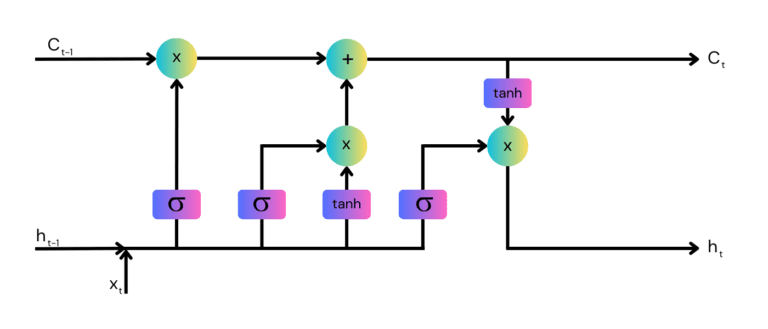

Autoregressive models are a class of generative models that generate sequences based on the assumption that each element in the sequence depends on the previous elements. These models have been successful in a variety of tasks, such as text generation, time series forecasting, and speech synthesis. Recently, autoregressive models have expanded into the field of image analysis and generation.
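To make the idea concrete, here is a minimal sketch of an autoregressive model: a character-level bigram model that generates text one character at a time. It is a deliberate simplification, since each character depends only on the single previous character rather than on the full history, and the corpus and function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, rng):
    """Generate text one character at a time, conditioning on the last one."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # no observed continuation; stop early
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)

# Hypothetical toy corpus; real models train on far larger data.
corpus = "the cat sat on the mat. the dog sat on the log. "
model = train_bigram(corpus)
print(generate(model, "t", 40, random.Random(0)))
```

Larger autoregressive models follow the same loop (predict a distribution over the next element, sample from it, append, repeat) but condition on much longer contexts using a neural network.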

Probably the most important architecture for autoregressive modeling is the Transformer, initially proposed in the paper “Attention Is All You Need” (Vaswani et al., 2017). The Transformer architecture is the basis for large language models (LLMs) that gained wide usage and publicity in recent years, including OpenAI GPT, which generates text autoregressively, and Google BERT, which uses the same architecture but is trained to fill in masked tokens rather than to generate text left to right.

Autoregressive models also have their limitations. They require massive amounts of data to train, and their inference process can be very computationally intensive.

What Is Deep Generative Modeling?

Deep generative modeling is a subset of generative models that utilizes deep learning techniques to generate data. Deep learning is a type of machine learning that uses artificial neural networks with several layers (hence the ‘deep’ in deep learning) to model and understand complex patterns in data.

Deep generative models can generate higher-quality and more complex data compared to traditional generative models. They can learn to generate data by training on a large amount of data and capturing the underlying data distribution.

Examples of deep generative models include generative adversarial networks (GANs), variational autoencoders (VAEs), and autoregressive models, including the Transformer architecture. These models have shown impressive results in tasks such as image synthesis, text generation, and anomaly detection.

Generative Models and Similar Concepts

Generative Modeling vs. Discriminative Modeling

Generative and discriminative models represent two fundamentally different approaches to machine learning. While generative models aim to model the distribution of individual classes, discriminative models focus on modeling the decision boundary between the classes.

Given a new data instance, a generative model asks: “How likely is each class to have generated this instance?” and then picks the most probable class using Bayes’ rule. A discriminative model asks directly: “Which class does this instance belong to?” without modeling how the data was generated. The generative approach is often more flexible and can generate new data instances, but it is typically more computationally intensive than the discriminative approach.
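One way to make the contrast concrete is a generative classifier: it models how each class produces data, then classifies by comparing how well each class explains a new point. The sketch below assumes one-dimensional data with a Gaussian per class; the data values, labels, and function names are hypothetical.

```python
import math
import statistics

def fit_class_models(points, labels):
    """Fit a 1-D Gaussian p(x | y) and a prior p(y) for each class."""
    models = {}
    for label in set(labels):
        xs = [x for x, y in zip(points, labels) if y == label]
        models[label] = {
            "prior": len(xs) / len(points),
            "mean": statistics.mean(xs),
            "std": statistics.stdev(xs),
        }
    return models

def log_gaussian(x, mean, std):
    """Log density of a Gaussian at x."""
    return -0.5 * math.log(2 * math.pi * std**2) - (x - mean) ** 2 / (2 * std**2)

def classify(models, x):
    """Pick the class maximizing log p(x | y) + log p(y) (Bayes' rule)."""
    return max(
        models,
        key=lambda y: log_gaussian(x, models[y]["mean"], models[y]["std"])
        + math.log(models[y]["prior"]),
    )

# Hypothetical data: heights in cm for two made-up classes.
points = [150, 152, 155, 158, 160, 175, 178, 180, 183, 185]
labels = ["a"] * 5 + ["b"] * 5
models = fit_class_models(points, labels)
print(classify(models, 154), classify(models, 181))
```

Because each class has its own distribution, the same fitted models could also be sampled to produce new points for a class. A discriminative classifier, such as logistic regression, would learn only the boundary between the classes and could not do this.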

Generative AI vs. Predictive AI

Predictive AI is used for predicting future outcomes based on historical data. It’s often used in forecasting, trend analysis, and other predictive analytics tasks.

Generative AI, which includes generative models, is more concerned with creating new data instances that resemble the training data. The focus here is not on predicting the future, but on understanding and replicating the patterns in the data. These two types of AI can complement each other in many applications.

Generative AI vs. Conversational AI

Conversational AI involves the use of messaging apps, speech-based assistants, and chatbots to automate communication and create personalized customer experiences. It’s a major application of natural language understanding.

Generative AI is a broader concept that includes not only natural language generation but also image and music generation, among other applications. While conversational AI can benefit from the advancements in generative AI, they are not the same. Generative AI can be seen as a tool that enables more sophisticated conversational AI systems.

Generative AI vs. Large Language Models (LLMs)

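Large Language Models (LLMs), like OpenAI’s GPT series, are specialized types of generative models focused on text data. Encoder models such as Google’s BERT are built on the same Transformer architecture and are often grouped with LLMs, but they are designed primarily for language understanding rather than open-ended text generation. LLMs are trained on vast datasets consisting of text from the internet, books, and other sources. Their primary function is to generate human-like text based on natural language prompts.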

LLMs are a subset or specific application area of generative AI, focusing exclusively on natural language understanding and generation. They are often used in tasks that require a deep understanding of human language, such as translation, summarization, and question-answering.

LLMs are generally autoregressive models, which means they generate sequences (like text) one element at a time, based on the previously generated elements. This makes generation inherently sequential and harder to parallelize than in other types of generative models like GANs, which can produce a whole data instance in a single forward pass.

Applications and Examples of Generative Models

Image Generation

Generative models are capable of generating realistic images of faces, animals, and imaginary landscapes. GANs, in particular, have been successful in producing high-quality images that are often hard to distinguish from real photos. Text-to-image models, which condition image generation on a textual prompt, are also increasingly used to generate realistic images.

Generative models also allow for the manipulation of images in unique ways, such as changing the style of an image to resemble a particular artist’s work, transforming a sketch into a photorealistic image, and “inpainting”, which involves seamlessly replacing elements in an image with other elements.

Examples of image generation models: OpenAI DALL-E, Stable Diffusion, Midjourney

Text Generation

Generative models can generate human-like text that can be used for chatbots, story generation, and even poetry. Autoregressive models, in particular those based on the Transformer architecture, have demonstrated impressive results in this area.

Text generation models are potentially useful for many industries, from customer service to entertainment to content writing. However, they also raise important ethical questions about the use and potential misuse of this technology.

Examples of text generation models: OpenAI GPT-4, Google PaLM, Meta LLaMA

Data Augmentation

In many machine learning projects, the amount of data available for training models is limited. Generative models can generate new training examples, augmenting the existing data. This can improve the performance of machine learning models, especially in cases where data is scarce or imbalanced.
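As a rough sketch of this idea, the example below balances an imbalanced dataset by fitting a simple generative model (a one-dimensional Gaussian) to the minority class and sampling synthetic points from it. The dataset and the `augment_minority` helper are hypothetical; in practice a more expressive model such as a GAN or VAE would take the Gaussian’s place.

```python
import random
import statistics

def augment_minority(minority, target_size, rng):
    """Add synthetic points sampled from a Gaussian fitted to the minority class."""
    mean = statistics.mean(minority)
    std = statistics.stdev(minority)
    n_new = max(0, target_size - len(minority))
    synthetic = [rng.gauss(mean, std) for _ in range(n_new)]
    return minority + synthetic

rng = random.Random(0)
# Hypothetical imbalanced dataset: 200 majority points, 10 minority points.
majority = [rng.gauss(0.0, 1.0) for _ in range(200)]
minority = [rng.gauss(4.0, 0.5) for _ in range(10)]

balanced_minority = augment_minority(minority, len(majority), rng)
print(len(majority), len(balanced_minority))  # both classes now have 200 points
```

Synthetic points are only as good as the model that produced them, so augmented data is normally used for training only, with evaluation kept on real data.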

Examples of data augmentation models: In principle, any generative model that can generate data in the required format can be used to augment datasets using that format. Practically, GANs and VAEs are commonly used for augmentation because, once trained, they can generate each sample in a single pass, which is typically cheaper than sampling from a large autoregressive model.

Anomaly Detection

Generative models can learn the normal behavior of a system from the training data and can then identify anomalies, which are instances that deviate from this normal behavior. This can be particularly useful in sectors like cybersecurity, where detecting unusual activities can prevent potential threats.
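A minimal sketch of likelihood-based anomaly detection follows: a Gaussian is fitted to data assumed to be normal, and new readings are flagged when the model assigns them a very low likelihood. The data, the latency interpretation, and the four-standard-deviation threshold are all assumptions made for this illustration.

```python
import math
import random
import statistics

def fit(normal_data):
    """Learn a Gaussian model of normal behavior."""
    return statistics.mean(normal_data), statistics.stdev(normal_data)

def log_likelihood(x, mean, std):
    """Log density of x under the learned Gaussian."""
    return -0.5 * math.log(2 * math.pi * std**2) - (x - mean) ** 2 / (2 * std**2)

def is_anomaly(x, mean, std, threshold):
    """Flag x when its log-likelihood falls below the threshold."""
    return log_likelihood(x, mean, std) < threshold

rng = random.Random(0)
# Hypothetical "normal" readings, e.g. request latency in milliseconds.
normal_data = [rng.gauss(100.0, 10.0) for _ in range(1000)]
mean, std = fit(normal_data)

# Assumed threshold: the log-likelihood of a point 4 standard deviations out.
threshold = log_likelihood(mean + 4 * std, mean, std)

for reading in (103.0, 95.0, 170.0):
    print(reading, "anomaly" if is_anomaly(reading, mean, std, threshold) else "normal")
```

Deep generative approaches to anomaly detection apply the same principle with a learned model of the data: instances that the model finds unlikely, or cannot reconstruct well, are treated as anomalies.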

Examples of anomaly detection algorithms: GANomaly, AnoGAN

Simulation and Forecasting

Generative models can simulate complex systems and predict future behavior, making them highly valuable in forecasting. For instance, in weather prediction, these models can simulate weather patterns and predict future conditions. Similarly, in finance, generative models can simulate market behavior and forecast future trends.

Example of a generative simulation model: FIN-GAN

What Are the Limitations of Generative Models?

Despite the numerous benefits of generative models, they are not without their limitations. Here is a look at some of the main limitations.

Training Complexity

Generative models often require a large amount of data and substantial computational resources to train effectively. This can make them inaccessible for small-scale projects or organizations with limited resources.

The training process can be time-consuming and require a high level of expertise to manage. Highly expressive models can also overfit, memorizing the training data rather than learning its general patterns, and then perform poorly on new, unseen data.

Ethical Concerns

The use of generative models also raises several ethical concerns. The ability of these models to generate realistic data can be exploited for malicious purposes. For example, they have been used to create deepfakes, which are manipulated images or videos that can be used to spread misinformation or commit fraud.

The use of generative models for personalization can also lead to privacy concerns. While personalized content can enhance user experience, it requires access to personal data. Ensuring that this data is handled responsibly and securely is important.

Quality Control

Another limitation of generative models is the difficulty in controlling the quality of the generated data. Generative models can generate a wide variety of data, but ensuring that this data is of high quality and useful can be challenging.

For instance, in the case of text generation, a model may generate grammatically correct sentences that lack meaningful content or context. Similarly, in image generation, a model may produce images that are visually appealing but lack realism or relevance to the task at hand.

AI Testing & Validation with Kolena

Kolena is an AI/ML testing & validation platform that solves one of AI’s biggest problems: the lack of trust in model effectiveness. The use cases for AI are enormous, but AI lacks trust from both builders and the public. It is our responsibility to build that trust with full transparency and explainability of ML model performance, not just from a high-level aggregate ‘accuracy’ number, but from rigorous testing and evaluation at scenario levels.

With Kolena, machine learning engineers and data scientists can uncover hidden machine learning model behaviors, easily identify gaps in the test data coverage, and truly learn where and why a model is underperforming, all in minutes, not weeks. Kolena’s AI/ML model testing and validation solution helps developers build safe, reliable, and fair systems by allowing companies to instantly stitch together razor-sharp test cases from their data sets, enabling them to scrutinize AI/ML models in the precise scenarios those models will face in the real world. The Kolena platform transforms AI development from an experimental practice into an engineering discipline that can be trusted and automated.
