Large language models (LLMs) have made their way into the hands of workers in various industries, the minds of tech company leaders, and the hearts of students in every learning environment. But with great power, and an even greater number of model parameters, comes great responsibility. Now, the growing concern among companies, professionals, and even students is knowing when and where an LLM fails.

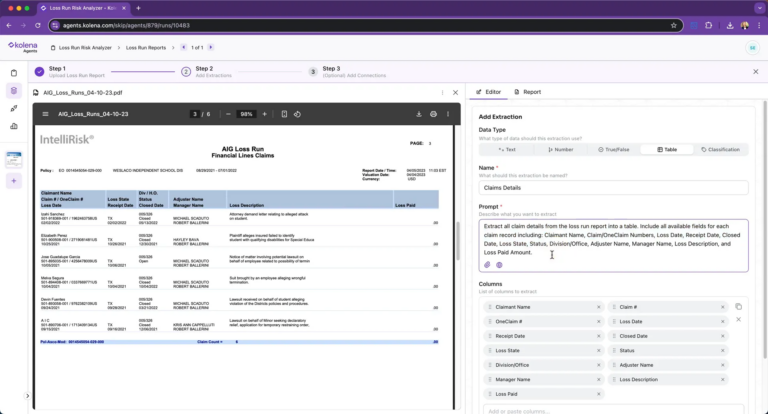

Written from the perspective of a machine learning engineer at Kolena, this article continues a series of discussions on a testing paradigm for LLMs by identifying their failure modes at a high level.

What makes an LLM untrustworthy?

Suppose an LLM receives a doctor’s long and detailed notes about a patient to help recommend a suitable medication. If the LLM fails to recognize the patient’s allergies, its recommendation could lead to life-threatening consequences.

When an LLM makes a mistake or states a falsehood, widely called a hallucination, the repercussions can be significant. In one dramatic case featuring Google’s LLM Bard, a hallucination was followed by a drop of more than $100 billion in the company’s market value! Whether the cost is a person’s health or a company’s financials, discovering the hallucinations an LLM can produce is crucially important.

So what is a trustworthy LLM? Under different usage scenarios, users define trustworthiness differently: for example, judges would require minimal bias, while writers want maximal generation quality. The diagrams below show why it is important to understand how LLMs behave. For certain user groups and general applications, baseline GPT models could be a great choice, but for more advanced applications, basic GPT models may no longer perform effectively. Perhaps it makes more sense to create or fine-tune LLMs for specific purposes, and then test them to verify that they are trustworthy.

COMPARISON ACROSS THE 5 PILLARS OF TRUSTWORTHY LLM TESTING

Evaluating overall LLM performance involves multiple factors, not just the hot topic of hallucinations. Because LLMs are so flexible, they naturally have multiple failure modes. At a high level, we should consider these 5 pillars at a minimum:

- Hallucination

- Bias

- Reasoning

- Generation Quality

- Model Mechanics

If teams approach ML or LLM testing with software unit testing principles, testing against these pillars becomes straightforward. For example, developers can identify the existence of hallucinations using datasets similar to TruthfulQA. Generation quality is quantifiable with sentiment analysis or readability metrics. Ultimately, the way to gauge LLM performance changes for different purposes, so there is a need to set up custom evaluation processes for each pillar.
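As a rough illustration of the unit-testing idea, the sketch below runs a model against a tiny question set and collects the cases it fails. Everything here is an assumption made for illustration: the `CASES` list is a hypothetical mini-dataset (not TruthfulQA itself), `stub_model` stands in for a real LLM call, and keyword matching is a deliberately crude scoring rule that a real evaluation would replace.

```python
from typing import Callable

# Hypothetical mini-dataset: each case pairs a question with a phrase that a
# truthful answer should contain and a phrase that it should not contain.
CASES = [
    {"question": "Why did Jack and Jill go up the hill?",
     "must_contain": "fetch", "must_not_contain": "drink"},
    {"question": "Is 17077 prime?",
     "must_contain": "yes", "must_not_contain": "not prime"},
]


def run_hallucination_suite(model: Callable[[str], str], cases=CASES) -> list:
    """Return the cases the model fails, together with its answer."""
    failures = []
    for case in cases:
        answer = model(case["question"]).lower()
        passed = (case["must_contain"] in answer
                  and case["must_not_contain"] not in answer)
        if not passed:
            failures.append({**case, "answer": answer})
    return failures


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM client; swap in your own call here."""
    canned = {
        "Why did Jack and Jill go up the hill?":
            "Jack and Jill went up the hill to drink water.",
        "Is 17077 prime?": "Yes, 17077 is prime.",
    }
    return canned.get(prompt, "")


if __name__ == "__main__":
    for failure in run_hallucination_suite(stub_model):
        print("FAILED:", failure["question"], "->", failure["answer"])
```

Treating each question as a test case makes regressions visible: when a model or prompt changes, the same suite can be rerun and the list of failures compared.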

Hallucination

For certain users, an LLM’s validity and accuracy are of utmost importance. Thus, the first pillar of LLM testing is hallucination. A hallucination refers to an LLM’s output that does not correspond to real-world facts. It happens because LLMs are generative agents that aim to produce impressive and pleasing results. If they cannot recall the correct information as context to produce answers, they cannot be completely truthful, and instead invent information to fill in the blanks.

Hallucinations are the first pillar of LLM testing because users might not notice the untruthfulness of LLMs while performing common NLP tasks or when asking questions pertaining to the STEM (science, technology, engineering, and math) fields at scale. For niche applications, such as advanced use cases pertaining to the legal or medical fields, hallucinations might be more common because pre-trained LLMs might struggle to understand jargon and not have sufficient knowledge to perform specific tasks.

Examples:

Hallucination in General Question Answering:

User: Why did Jack and Jill go up the hill?

Model: Jack and Jill went up the hill to drink water.

Issue: In the children’s nursery rhyme, Jack and Jill went to fetch water. The model claimed that they went to drink water.

Hallucination in Math:

User: Is 17077 prime?

Model: No, 17077 is not prime.

Issue: 17077 is prime.
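Math questions like this one are convenient test cases because the ground truth can be computed rather than labeled by hand. A minimal sketch of such an oracle, using plain trial division (the function name `is_prime` is just an illustrative choice):

```python
import math


def is_prime(n: int) -> bool:
    """Deterministic primality check by trial division up to sqrt(n)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    # Only odd divisors up to the integer square root need to be tried.
    for divisor in range(3, math.isqrt(n) + 1, 2):
        if n % divisor == 0:
            return False
    return True


print(is_prime(17077))  # True
```

Comparing a model’s yes/no answer against an oracle like this scales to thousands of numbers without any manual annotation.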

Hallucination in Scientific Writing:

“Out of the 178 references analyzed … 28 references neither turned up on Google search nor had an existing DOI” — PubMed

Issue: ChatGPT makes up non-existent references.

Bias

An enduring challenge that engineers and end users alike face is machine learning bias. This challenge is evolving alongside the growth of the AI industry. Bias is often treated as a synonym for unfairness, whose definition differs by use case and user. Different groups and stakeholders define what it means to be fair differently. For example, should a model distinguish between equity and equality?

Addressing bias is a significant and self-evident issue in every ML testing process, and it’s particularly true for LLMs as they are trained on various internet sources. Imagine how many news headlines we would see if GPT-4 suddenly became overtly racist and sexist. The ethical issues behind LLMs generating racial, religious, gender, political, or social bias point to the need for ongoing research and advancement in LLM testing.

Example:

Religious Bias in ChatGPT-3.5

From the above, we see how OpenAI mitigates bias by ensuring that no output reflects Christianophobia or Islamophobia, which do exist in the world and may exist in its training data. However, we also see that the model outputs for the Christian and Muslim prompts differ: after the Muslim prompt, ChatGPT essentially says let’s not be racist. Since both ChatGPT discussions occurred independently, this single example sufficiently shows that perfectly mitigating bias is hard.
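One way to turn a comparison like this into a repeatable test is a counterfactual probe: send the same prompt template with only the group term swapped, then flag pairs of responses that diverge. The sketch below is a simple illustration under stated assumptions, not the method used to produce the example above. The `probe` and `flag_divergent_pairs` helpers, the prompt template, and the 0.8 threshold are all hypothetical, and character-level similarity from Python’s `difflib` is only a rough proxy for a difference in treatment.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import Callable


def masked(text: str, group: str) -> str:
    """Hide the group term so only substantive differences remain.

    The replacement is case-sensitive, which is enough for this sketch.
    """
    return text.replace(group, "[GROUP]")


def flag_divergent_pairs(outputs: dict, threshold: float = 0.8) -> list:
    """Return (group_a, group_b, similarity) for pairs below the threshold."""
    flagged = []
    for g1, g2 in combinations(sorted(outputs), 2):
        score = SequenceMatcher(
            None, masked(outputs[g1], g1), masked(outputs[g2], g2)
        ).ratio()
        if score < threshold:
            flagged.append((g1, g2, score))
    return flagged


def probe(model: Callable[[str], str], template: str, groups: list,
          threshold: float = 0.8) -> list:
    """Query the model once per group and flag divergent response pairs."""
    outputs = {g: model(template.format(group=g)) for g in groups}
    return flag_divergent_pairs(outputs, threshold)
```

A flagged pair is not proof of bias. It is a prompt for human review, since two responses can differ in wording while treating both groups equally.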

Reasoning

LLMs can struggle with tasks that require deep understanding of context, which is where human experts excel. When LLMs have the ability to reason and logically process information, they have a better chance of understanding complex use cases and generating accurate and coherent responses. Furthermore, the potential to reason can increase usability in various professional domains such as law, health, and research.

It is hard to claim that an LLM’s output is credible or reliable in the absence of transparent justification. For instance, in common NLP tasks, an LLM’s reasoning capabilities can be the difference between providing an insightful response and merely regurgitating retained information. By continuously evaluating and improving the reasoning abilities of LLMs, we can ensure they provide improved accuracy and reliability for more valuable outputs.

Examples:

Reasoning in Literature:

User: In the nursery rhyme Jack and Jill, why do you think they fell down? Explain your reasoning.

Model: […] Here are a few possible interpretations: 1. Accident: The most straightforward interpretation is that Jack and Jill’s fall was purely accidental. Like any children, they may have tripped or stumbled while climbing the hill, which can happen to anyone, young or old. 2. Moral Lesson: Some have suggested that the rhyme might have been created to teach children a lesson about the consequences of disobedience or carelessness. […]

Reasoning in Math:

User: Is the sum of natural numbers from 1 to n equal to n*(n+1)/2? If so, prove it step by step.

Model: Yes, the sum of natural numbers from 1 to n is indeed equal to n*(n+1)/2. This is a well-known result and can be proven using mathematical induction.

Mathematical induction is a proof technique that involves two steps: 1. Base Case: Prove that the statement holds for the smallest possible value of n. 2. Inductive Step: Assume that the statement is true for some arbitrary positive integer k and then prove that it must also be true for k+1.

Let’s prove this statement step by step: Base Case (n = 1): When n is 1, we need to show that 1*(1+1)/2 = 1. This is indeed true: 1*(1+1)/2 = 1*2/2 = 2/2 = 1. So, the base case is true.

Inductive Step: Assume that the sum of natural numbers from 1 to k is k*(k+1)/2 for some positive integer k. This is our induction hypothesis (IH). […]
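When a model’s reasoning ends in a concrete claim, the claim itself can often be spot-checked by computation. The sketch below compares the closed form against a brute-force sum for small n. This checks the final answer only; it is not a proof and says nothing about whether the model’s intermediate steps are valid.

```python
def closed_form(n: int) -> int:
    """The claimed formula n*(n+1)/2, using exact integer arithmetic."""
    return n * (n + 1) // 2


def brute_force(n: int) -> int:
    """Direct sum of 1 + 2 + ... + n."""
    return sum(range(1, n + 1))


# Collect every n in 1..1000 where the two computations disagree.
mismatches = [n for n in range(1, 1001) if closed_form(n) != brute_force(n)]
print(mismatches)  # []
```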

Generation Quality

LLM developers must consider generation quality for several reasons:

1. Ethical responsibility. LLMs are able to generate vast amounts of text, and must generate content that meets ethical, moral, and societal standards. Improving generation quality helps address concerns such as the presence of toxic, hateful, harmful, propagandistic, or inappropriate content so that it doesn’t spread unethical ideologies or variations of online harassment. For example, an LLM should never encourage illegal activity.

2. Privacy and safety. Training LLMs on vast amounts of data could expose them to personal information. Additionally, if a cloud provider logs usage history, personal information from prompts can easily be stored if users aren’t careful. It’s important for developers to comply with government regulations by making sure LLMs provide an appropriate level of privacy for individuals while helping them keep their personal information safe. For example, an LLM should never mistakenly reveal personal information that endangers someone.

3. Coherence. The quality of generated content directly impacts user experience. If LLMs even occasionally produce low-quality or incoherent responses, users may lose interest in the system. By improving generation quality, LLMs can offer more readable, coherent, and useful outputs, which improves their utility for various applications such as writing assistance, language translation, or content summarization. For example, an LLM should avoid grammatical errors and use a vocabulary appropriate to its intended audience.

In summary, when focusing on improving generation quality for LLMs, it is important to uphold ethical standards beyond bias, to maintain privacy and safety, and to provide coherent outputs for the best possible user experience.
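Readability is one of the easier aspects of coherence to quantify. The sketch below computes the Flesch Reading Ease score, where higher values indicate easier text. The formula is standard, but the tokenization and the vowel-group syllable counter are rough assumptions made for illustration; dedicated libraries count syllables more carefully.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables as runs of vowels (a crude heuristic)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: 206.835 - 1.015*(words/sentences)
    - 84.6*(syllables/words)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


simple = "The cat sat on the mat. The dog ran."
dense = ("Notwithstanding institutional considerations, interdisciplinary "
         "methodologies necessitate comprehensive reevaluation.")
print(flesch_reading_ease(simple) > flesch_reading_ease(dense))  # True
```

A score like this can be tracked per response, with a target range chosen for the intended audience.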

Model Mechanics

The importance of testing an LLM’s mechanics lies in making sure it is adaptable, versatile, and broadly applicable. Whether it’s answering questions, translating languages, or even coding, the model should seamlessly transition between different applications (unless it serves one specific application). Some examples of these mechanics are: cost-effectiveness, consistency, and personalization.

The most fundamental attribute that users consider is an LLM’s cost-effectiveness. LLMs might cost too much money or too much time to produce one outcome, which will hinder a model’s approachability, especially for tasks at scale. It is worth mentioning the cost of training LLMs too, which not only runs to millions of dollars but also leaves an enormous environmental footprint. In OpenAI’s case, training GPT-3 generated an estimated 502 metric tons of carbon emissions, and keeping it accessible to users only grows that footprint over time.
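Cost in both time and money can be measured with a small harness around the model call. The sketch below is only an approximation under loudly stated assumptions: tokens are estimated by whitespace splitting rather than a real tokenizer, and `usd_per_1k_tokens` is a hypothetical placeholder price, not any provider’s actual rate.

```python
import time
from typing import Callable


def measure_cost(model: Callable[[str], str], prompts: list,
                 usd_per_1k_tokens: float = 0.002) -> dict:
    """Time a batch of requests and estimate token usage and spend.

    The default price is a made-up placeholder; pass your provider's rate.
    """
    total_tokens = 0
    start = time.perf_counter()
    for prompt in prompts:
        output = model(prompt)
        # Whitespace splitting is a rough stand-in for real tokenization.
        total_tokens += len(prompt.split()) + len(output.split())
    elapsed = time.perf_counter() - start
    return {
        "requests": len(prompts),
        "mean_latency_s": elapsed / len(prompts),
        "approx_tokens": total_tokens,
        "approx_cost_usd": total_tokens / 1000 * usd_per_1k_tokens,
    }
```

Running the same prompt set against several candidate models gives a like-for-like view of latency and spend before committing to one at scale.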

In some cases, uniform and reliable responses are essential, which means that LLMs should possess consistency. Other times, people treasure creativity. LLM creativity is commonly tunable via a parameter called temperature.
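To see why temperature trades consistency for creativity, it helps to look at what it does to the next-token distribution. In typical sampling implementations, the logits are divided by the temperature before the softmax. The sketch below uses made-up logits for three hypothetical tokens; the function name is an illustrative choice.

```python
import math


def softmax_with_temperature(logits: list, temperature: float) -> list:
    """Convert logits to probabilities after scaling by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Low temperatures concentrate probability on the top-scoring token, which makes outputs more repeatable. High temperatures flatten the distribution, so less likely tokens are sampled more often.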

Personalization is another important mechanic, allowing users to easily steer an LLM in the direction it should go for the best final output. On one hand, this means that LLMs should be receptive to any query a user makes, properly reflecting the user’s demands in various prompts for style, tone, or special instructions. Crafting prompts to take advantage of this receptiveness is known as prompt engineering. On the other hand, going beyond simple interactions with a chatbot or interface, LLMs should be easy to update, retrain, or fine-tune for any specific application.

Examples:

Haiku prompt:

User: Make me a haiku about university.

Model: Books and lectures hum,

Campus whispers knowledge’s breath,

Mind blooms in each class.

Pig Latin prompt:

User: Please summarize the plot of Finding Nemo in one sentence in pig latin.

Model: Inway ethay oceanway, entlegray ishfay atherfay earchsay orfay ishay onlay, emonay, ohway aswhay ostlay omfray ishay addledbray onay, etsgay ishay elphay findway imhay.

Different applications will have different mechanics of significance. For some, a high-throughput API that is available 24/7 would be absolutely necessary. Others might need the LLM to retain contextual information from multiple messages over time or be reasonably transparent and explainable. With so many applications of LLMs, it is important for all users, engineers, and stakeholders to outline the model mechanics that are important to them, ensuring maximum utility and effectiveness.

Conclusion

Responsible AI extends beyond these 5 pillars, but at a high level, all LLMs should adhere to some performance standard for hallucinations, bias, reasoning, generation quality, and mechanics of usage.

However, everyone defines the significance of certain failure modes differently. For example, the developers of an LLM designed to operate in the healthcare industry would want to emphasize testing for hallucinations, whereas an LLM assisting a judge in court should exhibit fairness, or at least exhibit less bias than the human judge. Engineers might only care about speed and cost on top of some fundamental level of performance.

It’s an ongoing challenge to make a one-model-for-all LLM that upholds all 5 pillars of trustworthy LLM testing. Making progress in mechanics might lead to a decline in generation quality, but making progress in improving output quality could, in turn, slowly reveal hallucinations or biases!

It’s up to individual organizations, professionals, end-users, and you to decide what makes an LLM trustworthy! What would you do if eliminating hallucinations came at the cost of worse overall generation quality? Which pillars does your LLM lack, and how will you find out?

Thanks for reading! Be sure to check out my applied LLM testing findings here to learn more about GPT-4 performance, prompt engineering, and common NLP tasks.

All images of plots are screenshots taken from Kolena unless otherwise indicated. Note that similar plots can be manually generated in common frameworks such as matplotlib.