What Is Explainable AI?

Explainable AI, also known as XAI, is a branch of AI that focuses on creating systems that can provide clear, understandable explanations for their actions. As AI models grow more complex, it becomes increasingly difficult to understand and interpret how they reach their decisions. This lack of transparency can erode trust. It also makes it hard to tell whether a system is behaving as its designers intended, which hinders the adoption of AI technologies.

XAI aims to bridge this gap by offering detailed insight into the workings of AI systems, making them more transparent and trustworthy. Beyond helping specialists inspect a model, it enables AI systems to explain individual decisions in a way that is meaningful and useful to the people who rely on them. This is particularly important in sectors such as healthcare, finance, and defense, where AI decisions can have significant consequences.

By explaining its actions, an AI system can help users understand why a particular decision was made. Users can then judge whether it was the right decision and work out how to correct it if it was not. Explainability also makes it easier to verify that an AI system behaves ethically, and to justify its decisions when they face technical or legal challenges.


Four Principles of Explainable AI

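These four principles come from the National Institute of Standards and Technology (NIST) report “Four Principles of Explainable Artificial Intelligence” (NISTIR 8312). The report names them Explanation, Meaningful, Explanation Accuracy, and Knowledge Limits.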

An AI System Should Provide Evidence or Reasons for All Its Outputs

Every decision made by an AI system should be backed by clear, tangible evidence. This evidence should be easily accessible and understandable to human users, enabling them to understand why the AI system made a specific decision.

AI systems often make decisions that can have serious consequences. For example, an AI system might be used to diagnose diseases, approve loans, or predict stock market trends. In such scenarios, it is crucial that the AI system can provide clear evidence for its decisions. This increases trust in the system and allows users to challenge decisions they believe are incorrect.
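One common way to attach evidence to an output is to report how much each input pushed the decision. The sketch below assumes a hypothetical, hand-weighted linear loan-approval model. The feature names, weights, and threshold are invented for illustration. In a linear model, weight times value is an exact per-feature contribution to the score, which is what makes such models easy to explain.

```python
import math

# Hypothetical hand-set weights for a toy loan-approval model (illustration only).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0


def decide_with_evidence(applicant, threshold=0.5):
    """Return an approval decision together with the per-feature evidence behind it."""
    # In a linear model, each feature contributes weight * value to the log-odds.
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))  # logistic function
    # Rank the evidence by how strongly each feature pushed the decision either way.
    evidence = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {"approved": probability >= threshold, "probability": probability, "evidence": evidence}


result = decide_with_evidence({"income_k": 60, "debt_ratio": 0.5, "late_payments": 2})
print(result["approved"])  # False
for name, contribution in result["evidence"]:
    print(f"{name}: {contribution:+.2f}")
```

In this made-up case, income pushes toward approval (+2.40). Late payments (-1.60) and the debt ratio (-1.50) outweigh it, which gives an approval probability of about 0.15. An applicant who disagrees can see exactly which inputs to dispute.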

Explanations of AI Systems Should Be Understandable by Individual Users

An AI system should not offer only technical explanations that make sense to AI experts. It should also provide simple, intuitive explanations that are accessible to all users, regardless of their technical expertise.

This is particularly important in sectors where AI is used by non-technical users. For example, in healthcare, AI systems are often used by doctors and nurses who may not have a deep understanding of AI.
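Meeting this principle often comes down to translating model internals into the user's vocabulary. As a minimal sketch, assume a model has already produced signed per-feature contributions, where a positive value means the feature favored approval. The hypothetical `FRIENDLY_NAMES` mapping and the sentence templates below turn the strongest contributions into plain language.

```python
# Hypothetical mapping from internal feature names to wording a non-expert recognizes.
FRIENDLY_NAMES = {
    "income_k": "your annual income",
    "debt_ratio": "your debt-to-income ratio",
    "late_payments": "the number of late payments on file",
}


def plain_language_explanation(contributions, top_k=2):
    """Describe the top_k strongest signed contributions in everyday language."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    sentences = []
    for name, value in ranked[:top_k]:
        label = FRIENDLY_NAMES.get(name, name).capitalize()  # fall back to the raw name
        if value > 0:
            effect = "worked in your favor"
        elif value < 0:
            effect = "worked against you"
        else:
            effect = "had no effect"
        sentences.append(f"{label} {effect}.")
    return " ".join(sentences)


print(plain_language_explanation({"income_k": 2.4, "debt_ratio": -1.5, "late_payments": -1.6}))
# Your annual income worked in your favor. The number of late payments on file worked against you.
```

The non-technical user sees two sentences instead of a table of coefficients. Expert users can still be offered the full numeric breakdown.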

Explanations Should Correctly Reflect a System’s Processes for Generating Outputs

An AI system must not offer a plausible-sounding rationalization that is disconnected from how it actually reached its decision. Its explanation should accurately depict the decision-making process, showing how it arrived at a specific output.

This principle ensures that the explanations provided by the AI system are truthful and reliable. It prevents the AI system from providing misleading or false explanations, which could lead to incorrect decisions and a loss of trust in the system. By providing accurate explanations, the AI system can help users understand its decision-making process, increasing their confidence in its decisions.
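One concrete, testable piece of this principle applies to additive explanations, which split a prediction into a baseline plus per-feature attributions. Those pieces should add back up to what the model actually output. Methods such as SHAP are designed to satisfy this property, which SHAP calls local accuracy. The sketch below is a minimal check under that assumption, using a hypothetical linear model. Passing the check is necessary but not sufficient for an explanation to be faithful.

```python
import math


def attributions_add_up(model_output, base_value, attributions, tol=1e-6):
    """Return True if base_value plus the attributions reproduces the model output."""
    return math.isclose(base_value + sum(attributions.values()), model_output, abs_tol=tol)


# Hypothetical linear model: output = bias + sum(weight * value).
weights = {"income_k": 0.04, "debt_ratio": -3.0}
bias = -1.0
applicant = {"income_k": 60, "debt_ratio": 0.5}
output = bias + sum(weights[k] * applicant[k] for k in weights)

# Attributions derived from the model itself vs. a plausible-sounding story.
derived = {k: weights[k] * applicant[k] for k in weights}
invented = {"income_k": 1.0, "debt_ratio": -0.2}

print(attributions_add_up(output, bias, derived))   # True
print(attributions_add_up(output, bias, invented))  # False
```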

Systems Should Only Operate Under the Conditions for Which They Were Designed

An AI system should not be used in scenarios outside the scope it was designed for. It should also flag cases where it is not confident enough in its output to be relied on. For example, an AI system designed to diagnose skin cancer should not be used to diagnose heart disease.

This principle ensures that the AI system is used appropriately, reducing the likelihood of incorrect decisions. It also prevents the AI system from being used in situations where it is not capable of providing reliable and accurate decisions.
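A simple, admittedly crude way to enforce this principle is to record the range of each input seen during training. The system then refuses to answer when a new input falls outside that range or lacks expected features. The sketch below assumes hypothetical skin-lesion features and a stand-in model. More robust out-of-distribution detection methods exist, but the pattern of returning "out of scope" instead of a guess is the same.

```python
def fit_envelope(training_rows):
    """Record the min/max of each numeric feature seen in the training data."""
    bounds = {}
    for row in training_rows:
        for name, value in row.items():
            lo, hi = bounds.get(name, (value, value))
            bounds[name] = (min(lo, value), max(hi, value))
    return bounds


def out_of_scope_features(bounds, row):
    """List features that are missing or outside the range seen in training."""
    return [
        name
        for name, (lo, hi) in bounds.items()
        if row.get(name) is None or not lo <= row[name] <= hi
    ]


def guarded_predict(model, bounds, row):
    """Call the model only for inputs inside its design envelope."""
    problems = out_of_scope_features(bounds, row)
    if problems:
        return {"prediction": None, "out_of_scope": problems}
    return {"prediction": model(row), "out_of_scope": []}


# Hypothetical training data and stand-in classifier for a skin-lesion triage model.
train = [
    {"age": 34, "lesion_mm": 4.0},
    {"age": 71, "lesion_mm": 12.5},
    {"age": 52, "lesion_mm": 7.2},
]
bounds = fit_envelope(train)


def toy_model(row):
    return "refer" if row["lesion_mm"] > 6.0 else "monitor"


print(guarded_predict(toy_model, bounds, {"age": 45, "lesion_mm": 8.0}))
print(guarded_predict(toy_model, bounds, {"age": 9, "lesion_mm": 8.0}))
print(guarded_predict(toy_model, bounds, {"heart_rate": 88}))
```

The last call mimics feeding cardiology data to a dermatology model. Every expected feature is missing, so the system declines rather than producing a confident-looking answer.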


Challenges in Implementing XAI

While explainability is important, achieving explainable AI can be challenging. Let’s explore the reasons for this.

Balancing Complexity and Explainability

AI systems often rely on complex algorithms to make decisions. These algorithms can be highly accurate, but they are also difficult to understand and explain. A deep neural network with millions of parameters may outperform a shallow decision tree, yet its reasoning is far harder to trace. The result is a trade-off in which increasing a system’s complexity tends to reduce its explainability.

Managing Trade-Offs Between Performance and Transparency

In some cases, making an AI system more transparent can reduce its performance. For example, adding components that generate explanations might slow inference or make the system more computationally intensive. Similarly, restricting a system to an inherently interpretable model, such as a linear model or a shallow decision tree, can cost accuracy on complex tasks. Developers may therefore have to weigh a higher-performing system against a more transparent one.

Ethical and Privacy Considerations in Explanations

In some cases, providing detailed explanations of an AI system’s decisions can reveal sensitive information. For example, an AI system might use personal data to make decisions, and explaining these decisions could reveal this data. This raises important ethical and privacy questions, which must be carefully considered when implementing XAI.

How to Implement the Explainable AI Principles: Design Guidelines for Explainable AI

Here are some design guidelines that can help you build an effective, explainable AI system.

Consider User Needs and Expectations When Designing AI Systems

The end user should be the focus of the design process. This principle advocates for human-centric AI that is designed to serve its users effectively. Understanding user needs and expectations helps identify the tasks the AI system should perform and the best way to carry them out.

Trust is a critical factor in the adoption of AI. If an AI system behaves in unpredictable or unexplainable ways, it can lead to mistrust and rejection. Therefore, considering user expectations helps ensure that the AI system’s workings align with what its users can understand and anticipate.

Prioritizing the user also helps establish ethical guidelines during the AI design process. AI should be designed to respect users’ privacy, uphold their rights, and promote fairness and inclusivity.

Clearly Communicate the Capabilities and Limitations of the AI System

Users should be able to understand what an AI system can do, what it can’t do, and how accurately it can perform its tasks. Clear communication about the AI system’s capabilities helps users leverage the system effectively.

Communicating the limitations of the AI system is equally important. No AI system is perfect, and it’s crucial for users to be aware of the system’s limitations to avoid misuse or overreliance. For instance, an AI system might be useful in identifying patterns in large datasets but might not be as effective in making predictions in scenarios where the data is sparse or inconsistent.

Design AI Systems to Acknowledge and Handle Errors or Uncertainties

It’s important to build a system that can deal with the inherent uncertainties of AI and potential errors. An AI system must be able to recognize and communicate these uncertainties to its users. For instance, an AI system that predicts weather should communicate the level of uncertainty in its predictions.
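Weather forecasting offers a natural pattern for this: run an ensemble of forecasts and report their spread alongside the central estimate. The sketch below assumes a list of ensemble-member temperature forecasts. It flags low confidence using a `max_spread` threshold that is arbitrary and hypothetical, and it uses only Python's `statistics` module.

```python
import statistics


def forecast_with_uncertainty(member_forecasts, max_spread=2.0):
    """Summarize ensemble temperature forecasts (°C) as an estimate plus its uncertainty."""
    if len(member_forecasts) < 2:
        raise ValueError("need at least two ensemble members to estimate spread")
    mean = statistics.mean(member_forecasts)
    spread = statistics.stdev(member_forecasts)  # sample standard deviation across members
    low, high = min(member_forecasts), max(member_forecasts)
    confident = spread <= max_spread  # illustrative, hypothetical cutoff
    message = f"{mean:.1f} °C (members range from {low:.1f} to {high:.1f} °C)"
    if not confident:
        message += "; low confidence: the forecast members disagree"
    return {"mean": mean, "spread": spread, "confident": confident, "message": message}


print(forecast_with_uncertainty([21.0, 22.0, 23.0])["message"])
print(forecast_with_uncertainty([15.0, 22.0, 29.0])["message"])
```

Both ensembles have the same mean, 22.0 °C. Only the second one tells the user not to rely on it heavily.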

AI systems should also have mechanisms to handle errors effectively. This could involve designing the AI system to learn from its mistakes, or providing users with the option to correct the AI system when it makes an error. This feedback can improve the AI system’s performance over time.

AI systems should also be designed to handle unexpected scenarios or inputs gracefully. An AI system should not crash or produce nonsensical outputs when faced with unexpected situations. Instead, it should be able to handle these situations in a way that preserves its functionality and maintains user trust.
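In code, handling unexpected inputs gracefully often means validating a request before it reaches the model and returning a clear, structured error instead of raising one. The sketch below assumes a hypothetical model that expects two numeric features. The schema, messages, and toy model are illustrative only.

```python
import math

# Hypothetical input schema: the model expects these two numeric features.
EXPECTED_FEATURES = ("income_k", "debt_ratio")


def _is_finite_number(value):
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool) and math.isfinite(value)


def safe_predict(model, payload):
    """Validate the input, then call the model; never let bad input crash the caller."""
    if not isinstance(payload, dict):
        return {"ok": False, "error": "input must be a mapping of feature names to numbers"}
    missing = [f for f in EXPECTED_FEATURES if f not in payload]
    if missing:
        return {"ok": False, "error": "missing features: " + ", ".join(missing)}
    invalid = [f for f in EXPECTED_FEATURES if not _is_finite_number(payload[f])]
    if invalid:
        return {"ok": False, "error": "expected finite numbers for: " + ", ".join(invalid)}
    try:
        return {"ok": True, "prediction": model(payload)}
    except Exception as exc:  # report unexpected model failures instead of propagating them
        return {"ok": False, "error": f"model failed: {exc}"}


def toy_model(p):
    return 0.04 * p["income_k"] - 3.0 * p["debt_ratio"]


print(safe_predict(toy_model, {"income_k": 50, "debt_ratio": 0.2}))
print(safe_predict(toy_model, {"income_k": "fifty", "debt_ratio": float("nan")}))
print(safe_predict(toy_model, ["not", "a", "dict"]))
```

Returning a consistent `{"ok": ..., ...}` shape lets the calling application show the user a meaningful message rather than a stack trace.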

Ensure Transparency in How Data Is Gathered, Processed, and Used

Transparency in data gathering involves informing users about what data the AI system collects, why it collects this data, and how it uses this data. This is important for respecting user privacy and for building trust in the AI system.

Another important factor is explaining the algorithms or models that the AI system uses, and how these algorithms make decisions or predictions based on the data. It’s also important to explain how the AI system learns from the data over time. This includes detailing how the AI system updates its models or algorithms based on new data, and how these updates might influence the AI system’s outputs.

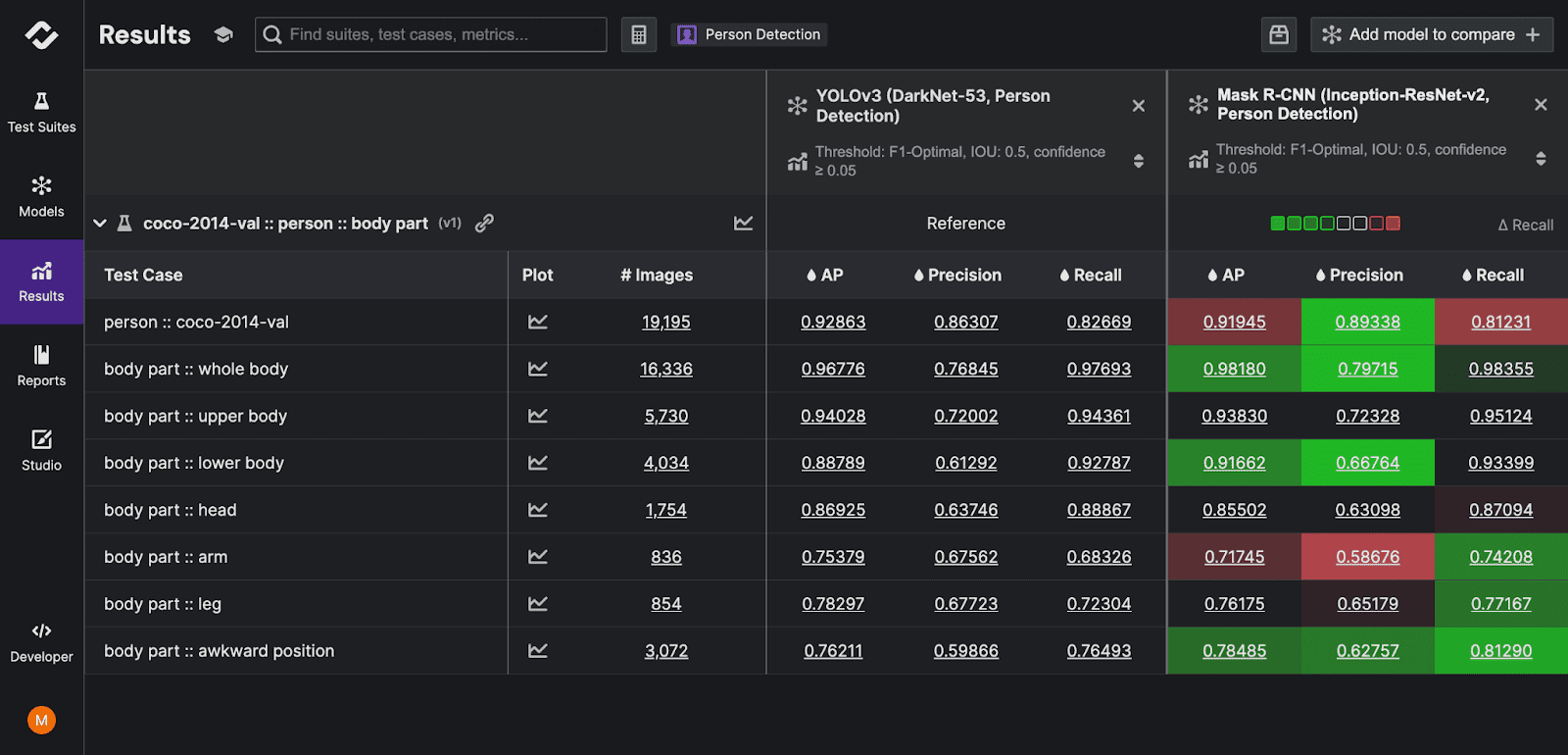

Explainable AI with Kolena

What if you could get the capabilities of several explainable AI tools in a single platform?

Kolena is a machine learning testing and validation platform that solves one of AI’s biggest problems: the lack of trust in model effectiveness. The potential use cases for AI are enormous, but AI is not yet fully trusted by either its builders or the public. It is our responsibility to build that trust with full transparency and explainability of ML model performance. That means looking beyond a high-level aggregate ‘accuracy’ number to rigorous testing and evaluation at the scenario level.

With Kolena, machine learning engineers and data scientists can uncover hidden model behaviors, easily identify gaps in test data coverage, and learn where and why a model is underperforming, all in minutes, not weeks. Kolena’s AI/ML model testing and validation solution helps developers build safe, reliable, and fair systems. It lets companies instantly stitch together razor-sharp test cases from their datasets, so they can scrutinize AI/ML models in the precise scenarios those models will face once deployed in the real world. The Kolena platform transforms AI development from an experimental practice into an engineering discipline that can be trusted and automated.
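The framework-agnostic sketch below, in plain Python, contrasts an aggregate accuracy number with scenario-level evaluation. It is not Kolena's API. The scenario tags, labels, and records are made up for illustration.

```python
from collections import defaultdict


def accuracy_by_scenario(records):
    """Return overall and per-scenario accuracy for (scenario, prediction, label) records."""
    totals, correct = defaultdict(int), defaultdict(int)
    for scenario, prediction, label in records:
        totals[scenario] += 1
        correct[scenario] += int(prediction == label)
    overall = sum(correct.values()) / sum(totals.values())
    per_scenario = {s: correct[s] / totals[s] for s in totals}
    return overall, per_scenario


# Hypothetical evaluation results for a vehicle detector, tagged by scenario.
records = [("daytime", "car", "car")] * 8 + [("night", "car", "car"), ("night", "none", "car")]
overall, per_scenario = accuracy_by_scenario(records)
print(f"overall: {overall:.0%}")  # overall: 90%
for scenario, acc in per_scenario.items():
    print(f"{scenario}: {acc:.0%}")  # prints "daytime: 100%", then "night: 50%"
```

A headline accuracy of 90% hides the fact that this hypothetical detector misses half of its night-time cases. Scenario-level testing is meant to surface exactly this kind of gap.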