What Are Explainable AI Tools?

Explainable AI refers to methods and techniques in the application of artificial intelligence that offer insights into the decision-making process of AI systems. These tools aim to describe the internal mechanics of an AI model in a way that is easily understood by humans.

The primary goal of explainable AI tools is to create transparency around AI decision-making processes. The ability to understand and interpret AI algorithms is crucial for ensuring the trustworthiness and reliability of AI systems. With these tools, it is possible to understand how and why a particular decision or prediction has been made by an AI system.

Explainable AI tools also play a significant role in improving the overall performance of AI models. By providing insights into the decision-making process, these tools can help identify and rectify flaws within a model, thereby enhancing its accuracy and reliability.

Key Features of Explainable AI Tools and Frameworks

Model Interpretability

Model interpretability refers to the ability to understand the internal workings of an AI model and explain how it makes decisions. This feature is particularly important as it helps build trust and confidence in AI systems, especially in fields such as healthcare and finance where decisions made by AI can have significant consequences.

Interpretability also aids in the improvement of AI models. By understanding how an AI model makes decisions, it becomes possible to identify and address any weaknesses or biases inherent in the model. This can lead to the development of more robust and reliable AI systems.

Visual Explanations

Explainable AI tools use visual aids such as graphs and charts to represent the decision-making process of an AI model. Visual explanations make it easier for humans to understand complex AI models by breaking down their decision-making processes into more digestible parts.

Visual explanations can also aid in identifying patterns and trends within the AI model’s decision-making process. This can prove invaluable in understanding how the model behaves under different circumstances and can help in fine-tuning its performance.

Model Debugging

Model debugging is a feature of explainable AI tools that makes it possible to identify and address issues within an AI model. Just like software debugging, model debugging involves finding and fixing faults in the model so that it performs as intended.

Debugging is crucial as it helps ensure the reliability and accuracy of the AI model. By identifying and addressing issues within the model, it becomes possible to improve its overall performance and reduce the likelihood of erroneous decisions or predictions.

Counterfactual Explanations

Counterfactual explanations help users understand the decision-making process of an AI model by exploring what-if scenarios. They show how the outcome of a decision or prediction would change if certain input variables were altered.

Counterfactual explanations can be particularly helpful in fields such as healthcare and finance where decisions made by AI systems can have significant implications. By exploring different what-if scenarios, it becomes possible to understand the rationale behind the AI model’s decisions and make more informed choices.
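As a minimal sketch of the what-if idea, the snippet below searches along a single input for the smallest change that flips a decision. The `approve_loan` rule, its coefficients, and the applicant values are hypothetical stand-ins for a trained model and real data; practical counterfactual methods usually search over several features at once and add plausibility constraints.

```python
def approve_loan(applicant):
    """Hypothetical scoring rule standing in for a trained model."""
    score = 0.5 * applicant["income"] / 1000 - 2.0 * applicant["missed_payments"]
    return score >= 20.0


def find_counterfactual(predict, instance, feature, step, max_steps=100):
    """Move one feature in fixed steps until the prediction flips.

    Returns the modified instance, or None if no flip occurs within max_steps.
    """
    original = predict(instance)
    candidate = dict(instance)  # copy so the original instance is untouched
    for _ in range(max_steps):
        candidate[feature] += step
        if predict(candidate) != original:
            return candidate
    return None


applicant = {"income": 30000, "missed_payments": 1}
counterfactual = find_counterfactual(approve_loan, applicant, "income", step=1000)
print(counterfactual)
```

Under these assumptions, the result reads as a statement such as "the application would have been approved if income had been this much higher", which is the form a counterfactual explanation typically takes.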

Sensitivity Analysis

Sensitivity analysis measures the effect of changes in input variables on the output of an AI model. This feature provides insights into which input variables have the most impact on the model’s decisions or predictions.

Sensitivity analysis can be particularly useful in improving the performance of AI models. By identifying which input variables have the most impact on the model’s output, it becomes possible to focus on these variables when training the model. This can also lead researchers to improve data quality for those specific variables.
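One simple form of sensitivity analysis is to perturb each input in turn and record how far the output moves. The sketch below assumes a hypothetical `predict_price` model and a made-up baseline; the 10% perturbation size is also an arbitrary choice for illustration.

```python
def predict_price(features):
    """Hypothetical model: price from area (m^2), room count, and building age (years)."""
    return (
        3000.0 * features["area"]
        + 10000.0 * features["rooms"]
        - 500.0 * features["age"]
    )


def sensitivity(predict, baseline, relative_change=0.10):
    """Output change when each input is increased by relative_change, one at a time."""
    base_output = predict(baseline)
    effects = {}
    for name, value in baseline.items():
        perturbed = dict(baseline)
        perturbed[name] = value * (1.0 + relative_change)
        effects[name] = predict(perturbed) - base_output
    return effects


baseline = {"area": 80.0, "rooms": 3.0, "age": 20.0}
effects = sensitivity(predict_price, baseline)

# Rank inputs by the magnitude of their effect on the output.
ranking = sorted(effects, key=lambda name: abs(effects[name]), reverse=True)
print(ranking)
```

One-at-a-time perturbation ignores interactions between inputs, so it is best read as a first look rather than a complete picture.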

ML Testing

Machine learning (ML) testing is a feature of explainable AI tools that helps ensure the accuracy and reliability of AI models. This feature involves testing the model’s performance under different conditions to ensure it is functioning as expected.

ML testing is crucial for ensuring the trustworthiness of AI systems. By testing the model under different conditions, it becomes possible to identify and address any issues, thereby ensuring the model’s optimal performance.
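Testing under different conditions often means breaking results down by scenario rather than relying on one aggregate number. The following sketch assumes each test record carries a hypothetical `scenario` tag; the records themselves are invented purely to illustrate the calculation.

```python
from collections import defaultdict


def accuracy_by_scenario(records):
    """Return overall accuracy and a dict of accuracy per scenario tag."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        totals[record["scenario"]] += 1
        correct[record["scenario"]] += int(record["label"] == record["prediction"])
    per_scenario = {name: correct[name] / totals[name] for name in totals}
    overall = sum(correct.values()) / sum(totals.values())
    return overall, per_scenario


# Invented records: 10 "daylight" samples and 2 "night" samples.
records = (
    [{"scenario": "daylight", "label": 1, "prediction": 1}] * 9
    + [{"scenario": "daylight", "label": 1, "prediction": 0}] * 1
    + [{"scenario": "night", "label": 1, "prediction": 1}] * 1
    + [{"scenario": "night", "label": 1, "prediction": 0}] * 1
)

overall, per_scenario = accuracy_by_scenario(records)
print(overall, per_scenario)
```

In this toy data the aggregate accuracy looks acceptable while the `night` scenario performs much worse, which is the kind of gap an aggregate metric can hide.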

ML Validation

Machine learning validation helps confirm the accuracy and reliability of an AI model. This feature involves validating the model’s performance against a set of predefined criteria to ensure it is functioning as expected.

ML validation is crucial for building trust and confidence in AI systems. By confirming that the model meets the set criteria, it becomes possible to ensure its reliability and dependability.
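Validation against predefined criteria can be as simple as comparing measured metrics with minimum thresholds. The metric names, threshold values, and measured values below are hypothetical, chosen only to show the shape of such a check.

```python
def validate(metrics, criteria):
    """Compare measured metrics with minimum thresholds.

    Returns a dict of failed criteria mapped to (measured, required_minimum).
    A metric that was not measured at all counts as a failure.
    """
    failures = {}
    for name, minimum in criteria.items():
        measured = metrics.get(name)
        if measured is None or measured < minimum:
            failures[name] = (measured, minimum)
    return failures


criteria = {"accuracy": 0.90, "recall": 0.85}  # hypothetical acceptance criteria
metrics = {"accuracy": 0.93, "recall": 0.80}   # hypothetical measured values

failures = validate(metrics, criteria)
print(failures)
```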

Free and Open Source Explainable AI Tools

Let’s review the most common free tools you can use to apply explainable AI.

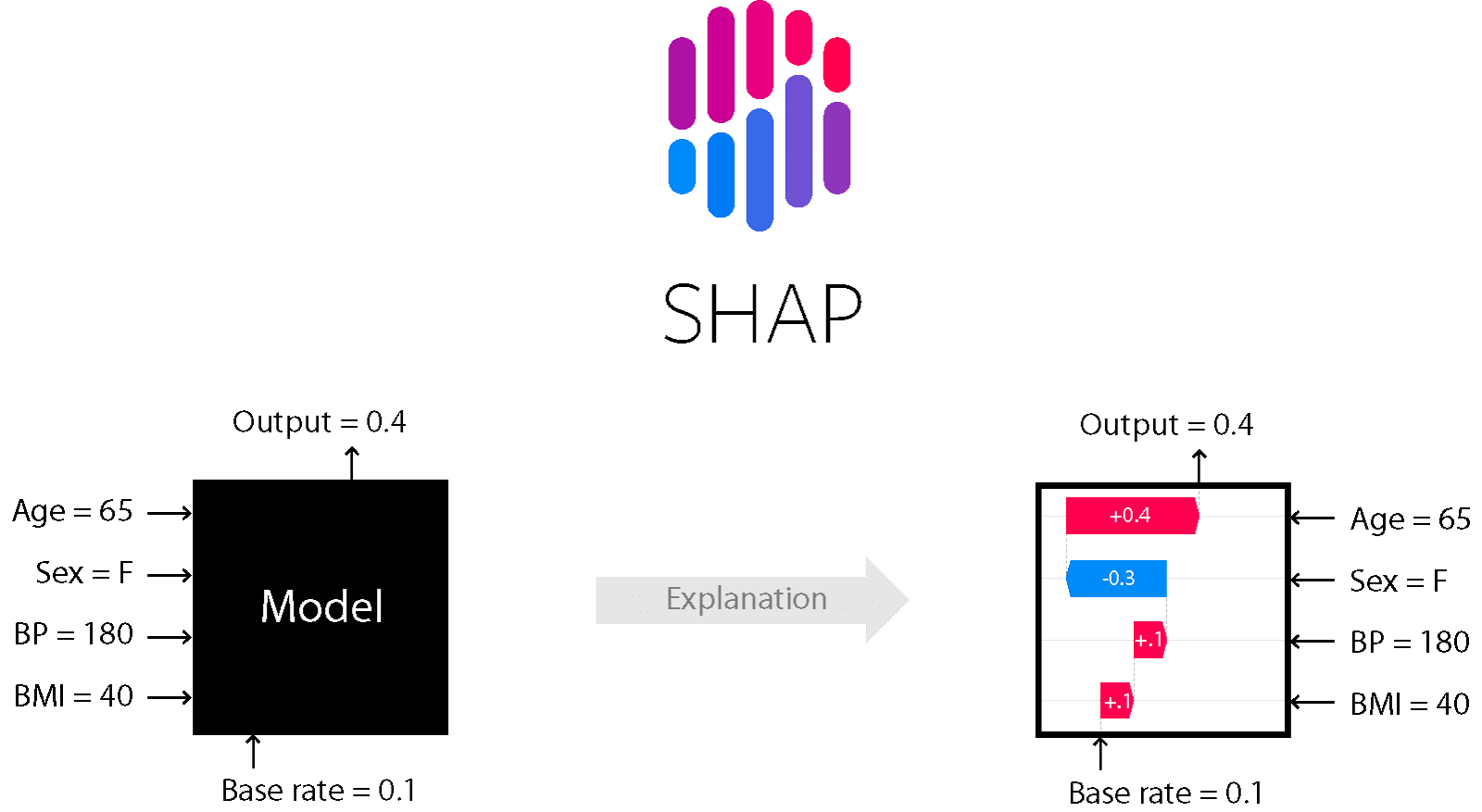

1. SHAP

GitHub repo: https://github.com/shap/shap

The SHAP algorithm (SHapley Additive exPlanations, Lundberg and Lee, 2017) is an explainable AI framework that has been widely adopted across fields. The paper addresses the question of why a model made a particular prediction, and notes that the highest accuracy is often achieved by complex models, which creates a tension between accuracy and interpretability.

Source: SHAP

SHAP uses a game-theoretic approach to explain the output of any machine learning model. It connects optimal credit allocation with local explanations using classic Shapley values from game theory. SHAP values can be used to interpret any machine learning model, including:

- Linear regression

- Decision trees and random forests

- Gradient boosting models

- Neural networks
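To make the idea of credit allocation concrete, here is a minimal sketch that computes exact Shapley values for a tiny hypothetical model by averaging each feature's marginal contribution over every feature ordering. It is not how the SHAP library is implemented: the `model` function and the all-zeros baseline are assumptions, "absent" features are simply set to their baseline values, and the brute-force enumeration only scales to a handful of features.

```python
from itertools import permutations


def model(features):
    """Hypothetical model with an interaction between x2 and x3."""
    return 2.0 * features["x1"] + features["x2"] * features["x3"]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: mean marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for ordering in orderings:
        current = dict(baseline)  # start with every feature "absent"
        previous = predict(current)
        for name in ordering:
            current[name] = instance[name]  # reveal one feature at a time
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


instance = {"x1": 1.0, "x2": 2.0, "x3": 3.0}
baseline = {"x1": 0.0, "x2": 0.0, "x3": 0.0}  # assumed reference point

phi = shapley_values(model, instance, baseline)
print(phi)
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the credit for the `x2 * x3` interaction is split evenly between the two features involved.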

2. LIME

GitHub repo: https://github.com/marcotcr/lime

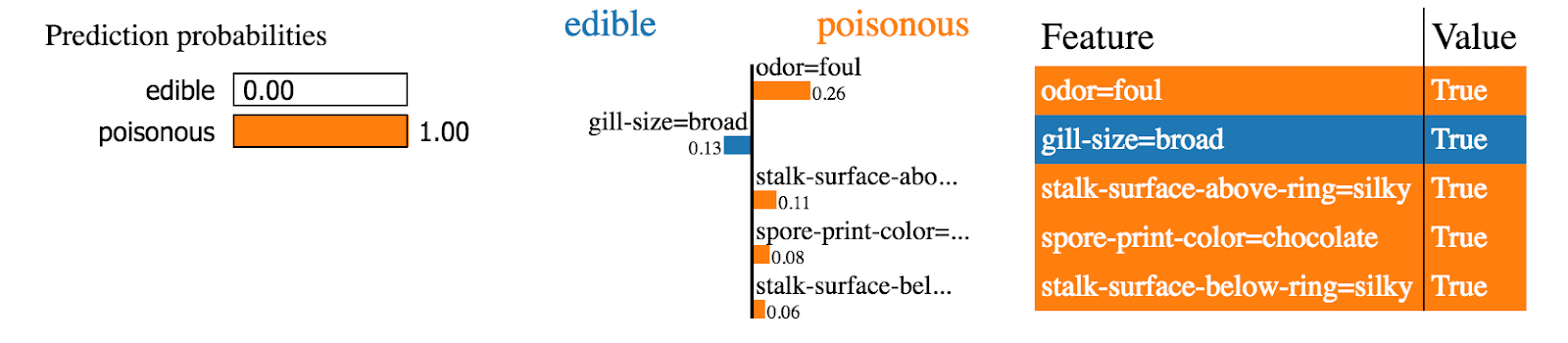

Developed by researchers at the University of Washington (Ribeiro et al., 2016), LIME (Local Interpretable Model-Agnostic Explanations) is a technique that promotes greater transparency within algorithms. It aims to simplify the interpretation of any black box classifier with two or more classes.

LIME explains an individual prediction by perturbing the input and fitting a simple, interpretable model that approximates the black box model’s behavior near that input. Because the approach is model-agnostic and modular, the form of the explanation can be adapted to the domain and task at hand.
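The core idea behind LIME, fitting a simple model around a single prediction, can be sketched in plain Python. This is an illustration of the local-surrogate concept under stated assumptions, not the LIME library's code: `black_box` is a hypothetical model, and the Gaussian sampling, kernel width, and unregularized weighted least squares are choices made for brevity.

```python
import math
import random


def black_box(x):
    """Hypothetical nonlinear model standing in for any opaque predictor."""
    return x[0] ** 2 + 3.0 * x[1]


def solve_linear_system(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (m[i][n] - tail) / m[i][i]
    return w


def local_surrogate(predict, instance, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model to predictions near the instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in instance]
        sample = [v + d for v, d in zip(instance, offsets)]
        dist_sq = sum(d * d for d in offsets)
        rows.append([1.0] + offsets)  # intercept column + offsets from the instance
        targets.append(predict(sample))
        weights.append(math.exp(-dist_sq / kernel_width ** 2))  # closer = heavier
    size = len(instance) + 1
    # Normal equations of weighted least squares: (X^T W X) coef = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             for j in range(size)] for i in range(size)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(size)]
    coef = solve_linear_system(xtwx, xtwy)
    return coef[0], coef[1:]


intercept, slopes = local_surrogate(black_box, [1.0, 2.0])
print(intercept, slopes)
```

The fitted slopes act as the explanation: they approximate how much each feature drives the prediction in the neighborhood of the chosen input, even though the underlying model is not linear.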

Source: LIME

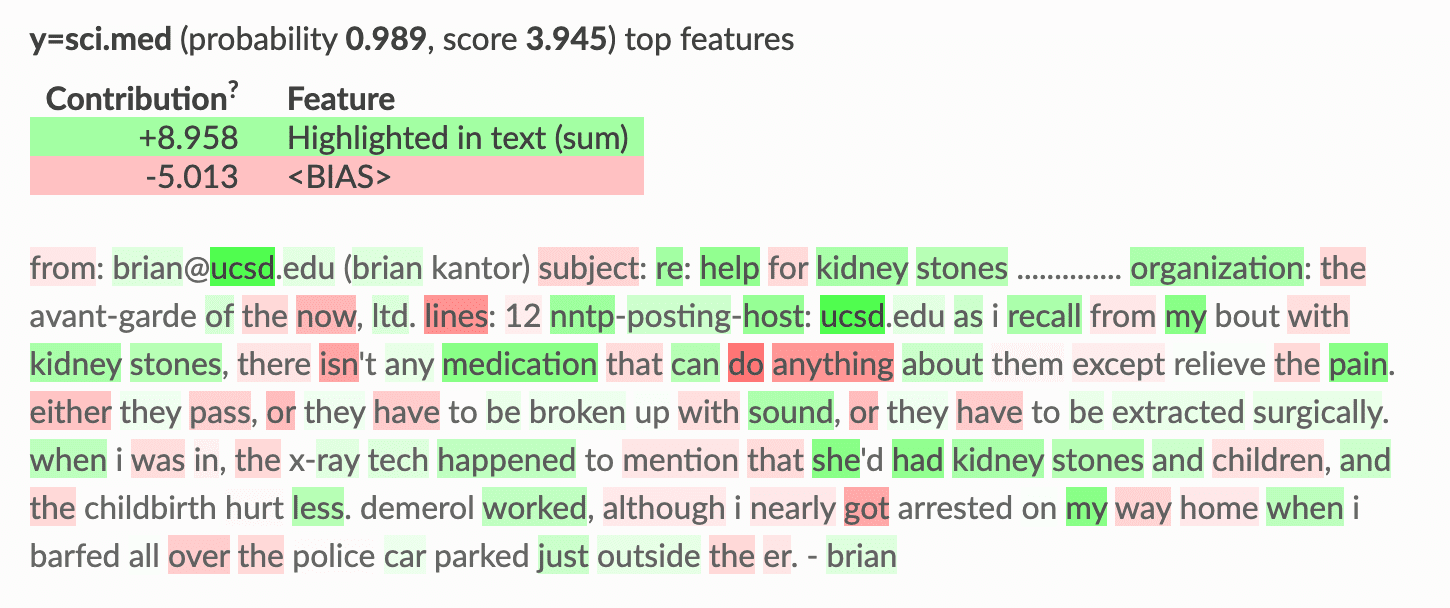

3. ELI5

GitHub repo: https://github.com/TeamHG-Memex/eli5

ELI5 is a Python package that helps debug machine learning classifiers and explain their predictions. Below is an example of how ELI5 shows features and their contribution to model predictions, overlaid on the training data.

ELI5 provides support for the following machine learning frameworks and packages:

- scikit-learn: ELI5 explains weights and predictions of scikit-learn linear classifiers and regressors, prints decision trees as text or as SVG, shows feature importances and explains predictions of decision trees and tree-based ensembles.

- Keras: Explains predictions of image classifiers via Grad-CAM visualizations.

- XGBoost: Shows feature importance and explains predictions of XGBClassifier, XGBRegressor and xgboost.Booster.

- LightGBM: Shows feature importance and explains predictions of LGBMClassifier and LGBMRegressor.

- CatBoost: Shows feature importance for CatBoostClassifier and CatBoostRegressor.

- Lightning: Explains weights and predictions of lightning classifiers and regressors.

- sklearn-crfsuite: Checks weights of sklearn_crfsuite.CRF models.

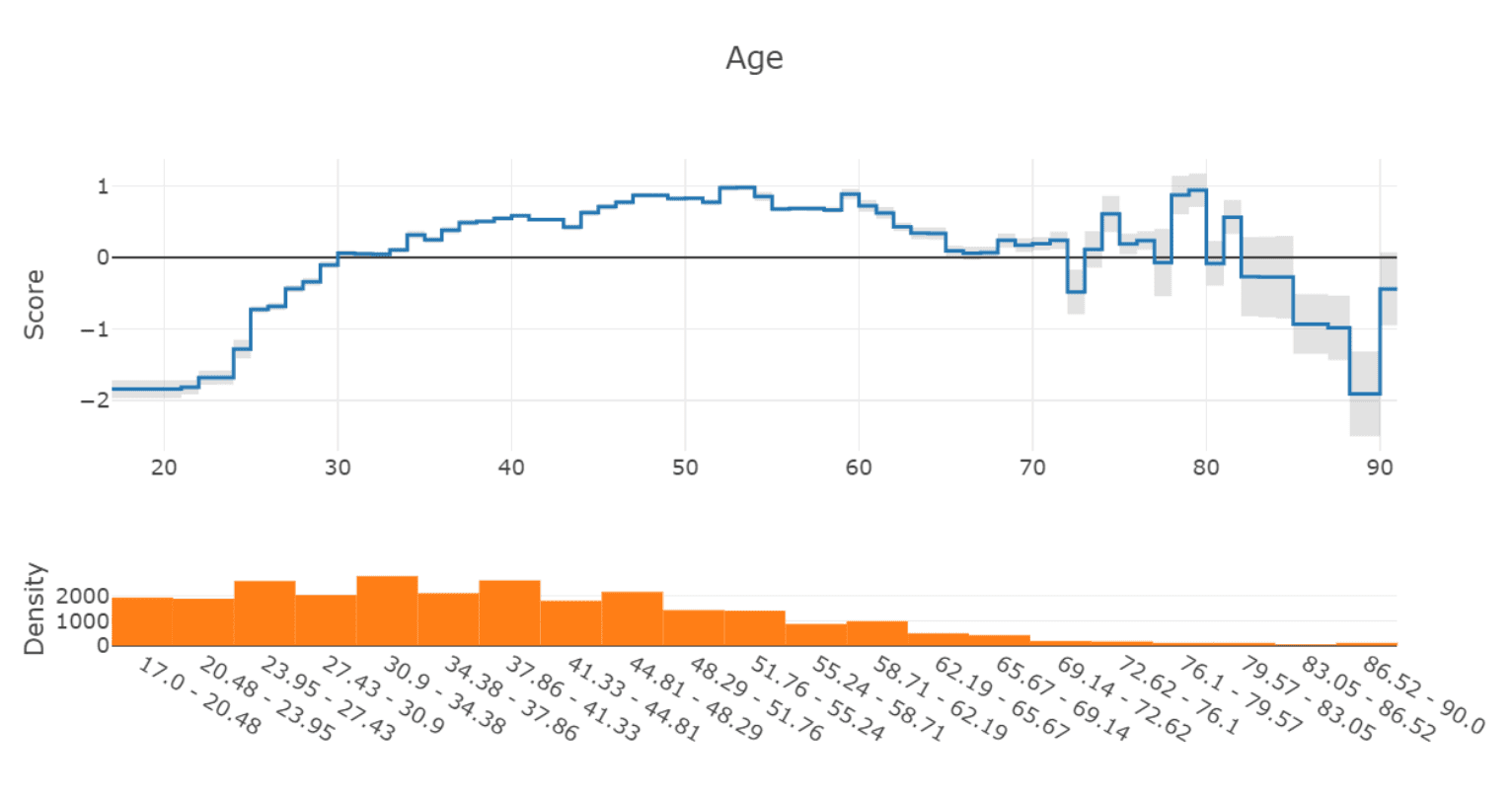

4. InterpretML

GitHub repo: https://github.com/interpretml/interpret

InterpretML is an open-source Python library that supports two approaches to AI explainability:

- Glassbox models: Machine learning models designed to be interpretable, such as rule lists, linear models, and generalized additive models.

- Blackbox techniques: Methods such as LIME and Partial Dependence, which help explain pre-existing models.
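Partial dependence, one of the blackbox techniques named above, can be illustrated without any library: fix one feature to a grid value for every row, average the predictions, and repeat across the grid. The sketch below is a hand-rolled version of the concept, not InterpretML's API; the `model` function and the data rows are hypothetical.

```python
def model(row):
    """Hypothetical blackbox model over two numeric features."""
    return 2.0 * row[0] + row[1] ** 2


def partial_dependence(predict, data, feature_index, grid):
    """Average prediction over the data with one feature fixed to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)  # copy so the dataset is left unchanged
            modified[feature_index] = value
            total += predict(modified)
        curve.append(total / len(data))
    return curve


data = [[1.0, 0.0], [2.0, 1.0], [3.0, 2.0], [4.0, 3.0]]
curve = partial_dependence(model, data, feature_index=0, grid=[0.0, 1.0, 2.0])
print(curve)
```

Plotting the resulting curve against the grid shows the average effect of the chosen feature on the model's output.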

Key features include:

- Comparison between different interpretability algorithms by making multiple methods available through a single, standardized API.

- Built-in, extensible visualization platform.

- Novel implementation of the Explainable Boosting Machine, a robust, interpretable glassbox model comparable in accuracy to many blackbox models.

5. AI Explainability 360

GitHub repo: https://github.com/Trusted-AI/AIX360

AI Explainability 360 is an open-source Python package that includes multiple algorithms covering different aspects of ML model explanation, along with proxy explainability metrics for improved understanding. Key features include support for tabular, text, image, and time series data, an intuitive graphical user interface, and step-by-step walkthroughs of typical use cases.

Supported explainability algorithms include:

- Data explanations: Including ProtoDash, Disentangled Inferred Prior VAE.

- Local post-hoc explanations: Including Contrastive Explanations Method, Contrastive Explanations Method with Monotonic Attribute Functions.

- Time-Series local post-hoc explanations: Including Time Series Saliency Maps using Integrated Gradients, Time Series LIME.

- Local direct explanations: Including Teaching AI to Explain its Decisions, Order Constraints in Optimal Transport.

- Global direct explanations: Including Interpretable Model Differencing, CoFrNets.

- Global post-hoc explanations: Including ProfWeight.

- Explainability metrics: Including Faithfulness, Monotonicity.

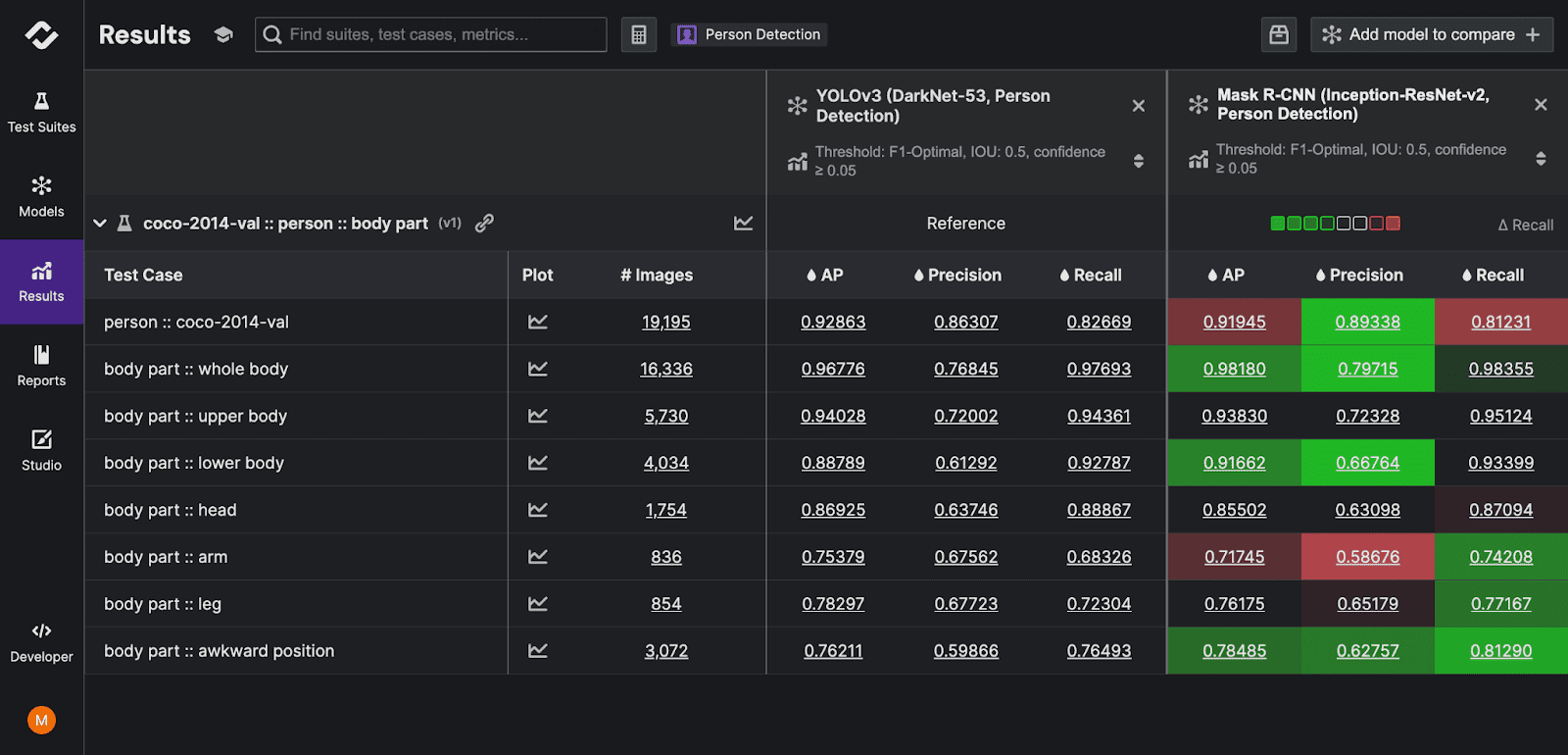

Explainable AI with Kolena

What if we want all the features of those explainable AI tools in one tool?

Kolena is a machine learning testing and validation platform that solves one of AI’s biggest problems: the lack of trust in model effectiveness. The use cases for AI are enormous, but AI lacks trust from both builders and the public. It is our responsibility to build that trust with full transparency and explainability of ML model performance, not just from a high-level aggregate ‘accuracy’ number, but from rigorous testing and evaluation at scenario levels.

With Kolena, machine learning engineers and data scientists can uncover hidden machine learning model behaviors, easily identify gaps in test data coverage, and truly learn where and why a model is underperforming, all in minutes, not weeks. Kolena’s AI/ML model testing and validation solution helps developers build safe, reliable, and fair systems. It allows companies to instantly stitch together razor-sharp test cases from their data sets, so they can scrutinize AI/ML models in the precise scenarios those models will face in the real world. The Kolena platform transforms AI development from an experimental practice into an engineering discipline that can be trusted and automated.