What Is the Anthropic Claude API?

Claude is a large language model (LLM) developed by Anthropic, an AI safety and research company. It processes and generates human-like text based on the input it receives. At the time of writing, it is considered one of the most advanced LLMs on the market, with reported results that beat contenders like OpenAI GPT-4 and Google Gemini on several benchmarks.

Anthropic places a special focus on safety and steerability, ensuring that outputs are aligned with human intentions. Claude incorporates mechanisms to better understand context and reduce harmful or undesired outputs.

The Claude API is a programming interface provided by Anthropic that allows developers to integrate the capabilities of the Claude language model into their applications. Applications send text input to the model through the API and receive generated responses in real time.

Developers can access the API through a Python or TypeScript SDK or simple HTTP requests, making it compatible with a wide range of programming languages and development environments. Additionally, the API supports features such as adjustable response length, temperature settings for controlling the creativity of the outputs, and context management to maintain the coherence of multi-turn conversations.

Quick Tutorial: Getting Started with the Anthropic Claude Python and TypeScript SDKs

Let’s walk through a quick tutorial showing how to use the Anthropic API to build applications with Claude.

Prerequisites

Before you begin, ensure you have the following:

- An Anthropic Console account

- An API key

- Python 3.7+ or TypeScript 4.5+

Anthropic provides SDKs for Python and TypeScript, although you can also make direct HTTP requests to the API.

Start with the Workbench

The Workbench is a web-based interface to Claude where you can prototype and test API calls (similar to the OpenAI Playground).

1. Log into the Anthropic Console and click on the Workbench.

2. Ask Claude a question in the User section.

User: How big is the Earth?

3. Click Run to see the response.

Response: The Earth has a diameter of approximately 12,742 kilometers (7,926 miles) at the equator. Its circumference…

You can control the format, tone, and personality of Claude’s response using a System Prompt.

4. Add a System Prompt to change the response style.

System prompt: You are a rapper. Respond only with rhyming verses.

5. Click Run again to see the modified response.

Response: Yo, check it, Earth's a sphere, spinning through the stars

Its size ain't small, but it ain't too far

...

Once satisfied with the setup, you can convert your Workbench session into code.

Install the SDK

Anthropic provides SDKs for both Python and TypeScript.

For Python:

1. Create a virtual environment in your project directory.

python3 -m venv claude-env

If the venv module is not installed on your system (for example, on Debian or Ubuntu), you can install it with the following command:

apt install python3.10-venv

2. Activate the virtual environment:

On macOS or Linux:

source claude-env/bin/activate

On Windows:

claude-env\Scripts\activate

3. Install the Anthropic SDK:

pip3 install anthropic

Set Your API Key

Set your API key as an environment variable. Replace ‘your-api-key-here’ with your actual API key.

On macOS and Linux:

export ANTHROPIC_API_KEY='your-api-key-here'

On Windows (Command Prompt, where quotes would become part of the value and are therefore omitted):

set ANTHROPIC_API_KEY=your-api-key-here

Call the API with Python SDK
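Before calling the API, it can help to confirm that the key is actually visible to Python. The following is a minimal sketch; the helper name `load_api_key` is hypothetical and not part of the Anthropic SDK, which reads the `ANTHROPIC_API_KEY` variable on its own.

```python
import os


def load_api_key(env=None):
    """Return the API key from ANTHROPIC_API_KEY, or raise a clear error.

    `env` defaults to os.environ; passing a dict makes the helper easy to test.
    """
    env = os.environ if env is None else env
    key = env.get("ANTHROPIC_API_KEY", "").strip()
    # A key set with surrounding quotes in Windows Command Prompt keeps the
    # quotes in its value, so strip them defensively.
    key = key.strip("'\"")
    if not key:
        raise RuntimeError("ANTHROPIC_API_KEY is not set in this shell")
    return key
```

Calling `load_api_key()` at the top of a script fails early with a readable message instead of an authentication error from the first request.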

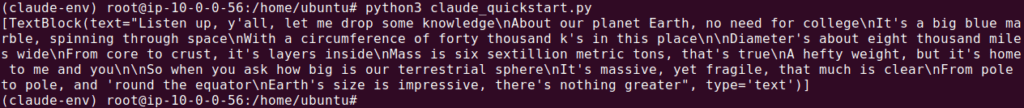

Create a Python script to call the API. Here’s an example script claude_quickstart.py:

import anthropic

# The client reads the API key from the ANTHROPIC_API_KEY environment variable.
client = anthropic.Anthropic()

message = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=1000,  # upper bound on the length of the response
    temperature=0,  # 0 gives the most deterministic output
    system="You are a rapper. Respond only with rhyming verses.",
    messages=[
        {
            "role": "user",
            "content": "How big is the Earth?"
        }
    ]
)

print(message.content)

Run the script:

python3 claude_quickstart.py

Response:
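The printed value is a list of content blocks rather than a plain string. As a rough sketch, a small helper can join the text blocks into one string; `extract_text` is a hypothetical name, and the code assumes each block exposes `type` and `text` either as attributes (SDK objects) or as dictionary keys (raw JSON).

```python
def extract_text(content_blocks):
    """Join the text of all text-type blocks in a message's content list."""
    parts = []
    for block in content_blocks:
        # SDK responses expose attributes; raw JSON responses are dicts.
        if isinstance(block, dict):
            block_type = block.get("type")
            text = block.get("text", "")
        else:
            block_type = getattr(block, "type", None)
            text = getattr(block, "text", "")
        if block_type == "text":
            parts.append(text)
    return "".join(parts)
```

In the quickstart script, `print(extract_text(message.content))` would then print only the generated verses.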

Call the API with TypeScript SDK

Here is a code example showing how to do the same with the TypeScript SDK:

import Anthropic from '@anthropic-ai/sdk';

const client = new Anthropic({
  apiKey: process.env['ANTHROPIC_API_KEY'], // This is the default and can be omitted
});

async function main() {
  const params: Anthropic.MessageCreateParams = {
    max_tokens: 1024,
    messages: [{ role: 'user', content: 'Wassup, Claude' }],
    model: 'claude-3-opus-20240229',
  };
  const message: Anthropic.Message = await client.messages.create(params);
  // Print the content blocks returned by the model.
  console.log(message.content);
}

main();

Examples Using the Claude API

These examples are adapted from the Anthropic documentation.

Create a Message

To create a message using the Claude API, you can send a structured list of input messages. These messages can contain text and/or image content, and the model will generate the next message in the conversation. The Messages API supports both single queries and stateless multi-turn conversations.

Endpoint: POST /v1/messages

Request Body: application/json

model: string (required) - The model that will complete your prompt. See models for additional details and options.

messages: object[] (required) - Input messages structured as alternating user and assistant conversational turns. Specify prior conversational turns with the messages parameter, and the model generates the next message in the conversation.

- Each input message must be an object with a role and content. The first message must always use the user role.
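The rules above can be checked before a request is sent. This is a minimal sketch that enforces only what this section describes (role and content present, first turn from the user, strict alternation); `validate_messages` is a hypothetical helper, and the live API may accept or reject inputs differently.

```python
def validate_messages(messages):
    """Raise ValueError if messages break the rules described above."""
    if not messages:
        raise ValueError("messages must contain at least one turn")
    for index, message in enumerate(messages):
        if not isinstance(message, dict):
            raise ValueError(f"message {index} must be an object")
        if "role" not in message or "content" not in message:
            raise ValueError(f"message {index} needs both role and content")
        # Even positions are user turns, odd positions are assistant turns.
        expected = "user" if index % 2 == 0 else "assistant"
        if message["role"] != expected:
            raise ValueError(
                f"message {index} has role {message['role']!r}, expected {expected!r}"
            )
    return messages
```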

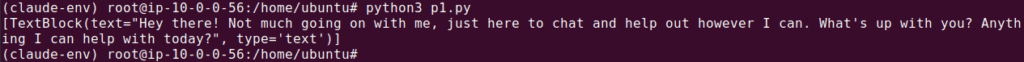

Example with a Single User Message:

{
  "model": "claude-3-5-sonnet-20240620",
  "messages": [
    {
      "role": "user",
      "content": "Wassup, Claude"
    }
  ]
}

Response:

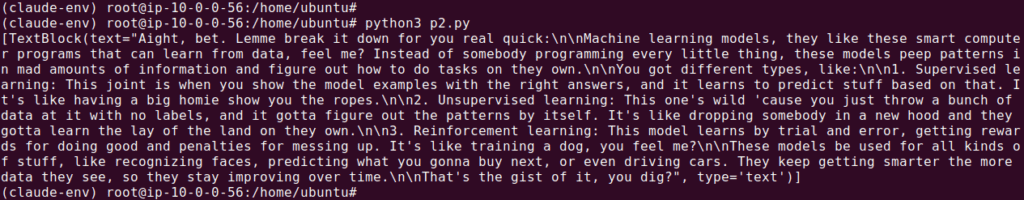

Example with Multiple Conversational Turns:

{
  "model": "claude-3-5-sonnet-20240620",
  "messages": [
    {
      "role": "user",
      "content": "Wassup, Claude."
    },
    {
      "role": "assistant",
      "content": "Hello! How can I assist you today?"
    },
    {
      "role": "user",
      "content": "Can you describe machine learning models in AAVE?"
    }
  ]
}

Response:

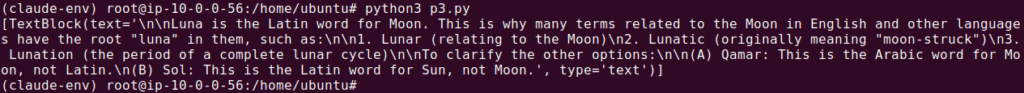
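Because the Messages API is stateless, the caller has to resend the full history on every turn. The sketch below shows one way to keep that history; the `Conversation` class and its `send` callable are hypothetical, with `send` standing in for whatever function actually calls the API and returns the assistant's reply text.

```python
class Conversation:
    """Keep the running message history for a stateless chat API."""

    def __init__(self, send):
        # send: callable taking the full list of messages and returning
        # the assistant's reply as a string.
        self.send = send
        self.messages = []

    def ask(self, user_text):
        pending = self.messages + [{"role": "user", "content": user_text}]
        reply = self.send(pending)
        # Commit both turns only after the call succeeds, so a failed
        # request does not leave a dangling user turn in the history.
        self.messages = pending + [{"role": "assistant", "content": reply}]
        return reply
```

With the Python SDK from the quickstart, `send` could be something like `lambda msgs: client.messages.create(model="claude-3-5-sonnet-20240620", max_tokens=1024, messages=msgs).content[0].text`.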

Example with a Partially-Filled Response from Claude:

{
  "model": "claude-3-5-sonnet-20240620",
  "messages": [
    {
      "role": "user",
      "content": "What's the Latin word for Moon? (A) Qamar (B) Sol (C) Luna"
    },
    {
      "role": "assistant",
      "content": "The correct answer is: (C) Luna"
    }
  ]
}

Response:

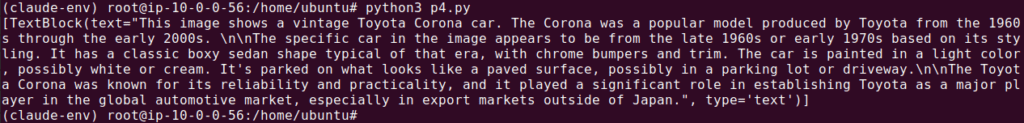
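When the last input message uses the assistant role, the model continues from that text, and the response contains only the continuation. A prefill is therefore usually cut off mid-answer, for example at an opening parenthesis, to steer the format of what follows. The helpers below are a hypothetical sketch of building such a request and stitching the final answer back together.

```python
def with_prefill(question, prefill):
    """Build messages whose final assistant turn is a partial answer."""
    return [
        {"role": "user", "content": question},
        {"role": "assistant", "content": prefill},
    ]


def complete_answer(prefill, continuation):
    """Rejoin the prefill with the text the model generated after it."""
    return prefill + continuation


messages = with_prefill(
    "What's the Latin word for Moon? (A) Qamar (B) Sol (C) Luna",
    "The correct answer is: (",
)
```

If the model replied with `C) Luna`, `complete_answer("The correct answer is: (", "C) Luna")` would reconstruct the full sentence.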

Example with an Image and Text Content:

{
  "model": "claude-3-5-sonnet-20240620",
  "messages": [
    {
      "role": "user",
      "content": [
        {
          "type": "image",
          "source": {
            "type": "base64",
            "media_type": "image/jpeg",
            "data": "/9j/4AAQSkZJRg..."
          }
        },
        {
          "type": "text",
          "text": "What is in this image?"
        }
      ]
    }
  ]
}

Response:

For image content blocks, the base64 source type is supported, along with the image/jpeg, image/png, image/gif, and image/webp media types.
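As a rough illustration, an image content block can be built from raw bytes with the standard library. The helper names `image_block` and `image_question` are hypothetical; the block layout and the list of media types follow the example and sentence above.

```python
import base64

SUPPORTED_MEDIA_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def image_block(image_bytes, media_type):
    """Encode raw image bytes as a base64 image content block."""
    if media_type not in SUPPORTED_MEDIA_TYPES:
        raise ValueError(f"unsupported media type: {media_type}")
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": media_type,
            "data": base64.standard_b64encode(image_bytes).decode("ascii"),
        },
    }


def image_question(image_bytes, media_type, question):
    """Build a user message that pairs an image with a text question."""
    return {
        "role": "user",
        "content": [
            image_block(image_bytes, media_type),
            {"type": "text", "text": question},
        ],
    }
```

For a file on disk, the bytes would typically come from `open(path, "rb").read()`.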

Note: To include a system prompt, use the top-level system parameter, as there is no “system” role for input messages in the Messages API.
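Code that was written for chat formats with a "system" role needs a small conversion step. The following sketch moves such entries into the top-level system parameter; `split_system` is a hypothetical helper, and joining several system entries with blank lines is an assumption made here for illustration.

```python
def split_system(chat):
    """Move system-role entries into a top-level system parameter.

    Returns a dict with "messages" and, if any system text was found,
    "system", ready to merge into a Messages API request body.
    """
    system_parts = [m["content"] for m in chat if m["role"] == "system"]
    params = {"messages": [m for m in chat if m["role"] != "system"]}
    if system_parts:
        params["system"] = "\n\n".join(system_parts)
    return params
```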

Streaming Messages

Streaming messages in the Claude API enable real-time responses using server-sent events (SSE). This feature is particularly useful when you need to handle incremental responses from the model, allowing applications to process and display outputs as they are generated.

Anthropic provides support for streaming responses through both Python and TypeScript SDKs. This section will demonstrate how to implement streaming in Python.

Python Example

To stream messages in Python, you can use the stream method provided by the anthropic SDK. Here’s how to set it up:

1. Import the necessary modules:

import anthropic

2. Initialize the client:

client = anthropic.Anthropic()

3. Create a streaming request:

with client.messages.stream(
    max_tokens=1024,
    messages=[{"role": "user", "content": "Hello"}],
    model="claude-3-5-sonnet-20240620",
) as stream:
    # text_stream yields each chunk of generated text as it arrives.
    for text in stream.text_stream:
        print(text, end="", flush=True)

This script sets up a streaming connection to the Claude model. The stream method opens an SSE connection, and the loop iterates over the text stream, printing each part of the response as it arrives.

Event Types

Each SSE includes an event type and corresponding JSON data. Here are the main events you will encounter:

- message_start: Indicates the start of a message, with an empty content field.

- content_block_start, content_block_delta, content_block_stop: Manage the incremental updates of content blocks within the message.

- message_delta: Represents top-level changes to the message object.

- message_stop: Marks the end of the message.

- ping: Periodic events to keep the connection alive.

- error: Indicates errors, such as overloaded_error for high usage periods.
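For applications that read the raw HTTP stream instead of using an SDK, the events listed above arrive as `event:` and `data:` lines separated by blank lines. The following is a simplified sketch of a parser for that framing; `parse_sse` is a hypothetical helper that handles only the fields this section mentions, not the full server-sent events specification.

```python
import json


def parse_sse(lines):
    """Yield (event_type, data) pairs from an iterable of SSE text lines."""
    event, data_lines = None, []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data_lines:
                yield event or "message", json.loads("\n".join(data_lines))
            event, data_lines = None, []
        elif line.startswith(":"):
            continue  # comment line, carries no data
        elif line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data_lines.append(line[len("data:"):].lstrip(" "))
    # Lenient choice: flush a final event even without a trailing blank line.
    if data_lines:
        yield event or "message", json.loads("\n".join(data_lines))
```

A consumer can then branch on the event type, for example ignoring `ping` and raising on `error`, while skipping event types it does not recognize.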

Example Error Event

If an error occurs, it will look like this:

event: error
data: {"type": "error", "error": {"type": "overloaded_error", "message": "Overloaded"}}

Delta Types

Each content_block_delta event includes a delta, which updates a specific content block. For example:

Text delta:

event: content_block_delta
data: {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": "ello frien"}}

Input JSON delta: Used for tool-use content blocks.

event: content_block_delta
data: {"type": "content_block_delta", "index": 1, "delta": {"type": "input_json_delta", "partial_json": "{\"location\": \"San Fra\"}"}}

To handle these deltas, accumulate the partial JSON strings and parse them once the content_block_stop event is received.
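The accumulation step can be sketched as follows. The function takes already-decoded event payloads (the JSON from each `data:` line) and returns the finished blocks by index; `assemble_blocks` is a hypothetical name, and delta types other than the two shown above are simply ignored.

```python
import json


def assemble_blocks(events):
    """Accumulate deltas per content block and finalize each on stop.

    Returns {index: str} for text blocks and {index: parsed JSON}
    for tool-use input blocks.
    """
    buffers, kinds, finished = {}, {}, {}
    for event in events:
        event_type = event.get("type")
        if event_type == "content_block_delta":
            index, delta = event["index"], event["delta"]
            if delta["type"] == "text_delta":
                kinds[index] = "text"
                buffers[index] = buffers.get(index, "") + delta["text"]
            elif delta["type"] == "input_json_delta":
                kinds[index] = "json"
                buffers[index] = buffers.get(index, "") + delta["partial_json"]
        elif event_type == "content_block_stop":
            index = event["index"]
            raw = buffers.pop(index, "")
            if kinds.get(index) == "json":
                # Partial JSON is only parseable once the block is complete.
                finished[index] = json.loads(raw) if raw else {}
            else:
                finished[index] = raw
    return finished
```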

Using the Claude API: Things You Need to Know

Versions

When making API requests, you must include the anthropic-version request header, such as anthropic-version: 2023-06-01. If you’re using the provided client libraries, this header is automatically handled for you.
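For direct HTTP access, the header has to be set by hand. Below is a minimal sketch using only the standard library; the base URL `https://api.anthropic.com` and the `x-api-key` header are not spelled out in this article and are stated here as assumptions about the public API, and `build_request` is a hypothetical helper.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"  # assumed base URL


def build_request(api_key, payload, version="2023-06-01"):
    """Prepare a POST /v1/messages request with the required headers."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,  # assumed authentication header
            "anthropic-version": version,
            "content-type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` would send the request; the function itself only builds it, which keeps it easy to inspect and test.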

For any major API version, the following aspects are preserved:

- Existing input parameters

- Existing output parameters

Anthropic may introduce the following in minor version updates:

- Additional optional inputs

- New values in the output

- Updated conditions for specific error types

- New variants for enum-like output values (e.g., streaming event types)

Errors

The Claude API follows a predictable HTTP error code format:

- 400 – invalid_request_error: Issues with the format or content of your request.

- 401 – authentication_error: Issues with your API key.

- 403 – permission_error: Your API key lacks the necessary permissions.

- 404 – not_found_error: The requested resource is not found.

- 413 – request_too_large: The request exceeds the allowed size.

- 429 – rate_limit_error: Your account has exceeded the rate limit.

- 500 – api_error: Unexpected internal errors within Anthropic’s systems.

- 529 – overloaded_error: The API is temporarily overloaded.

Errors are returned as JSON, with a top-level error object containing type and message values. For example:

{
  "type": "error",
  "error": {
    "type": "not_found_error",
    "message": "The requested resource could not be found."
  }
}

While the API versioning policy may expand the values within these objects, the core format remains consistent.
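A client can turn this error shape into an exception that carries the error type. The sketch below is illustrative only: `ClaudeAPIError` and `parse_error` are hypothetical names, and treating `rate_limit_error`, `api_error`, and `overloaded_error` as retryable is an assumption, not a rule stated by the API.

```python
import json

# Assumption: these error types are usually transient and worth retrying.
RETRYABLE_TYPES = {"rate_limit_error", "api_error", "overloaded_error"}


class ClaudeAPIError(Exception):
    """Exception built from an HTTP status and a JSON error body."""

    def __init__(self, status, error_type, message):
        super().__init__(f"{status} {error_type}: {message}")
        self.status = status
        self.error_type = error_type
        self.message = message
        self.retryable = error_type in RETRYABLE_TYPES


def parse_error(status, body):
    """Build a ClaudeAPIError, tolerating missing or malformed bodies."""
    try:
        payload = json.loads(body)
    except (ValueError, TypeError):
        payload = {}
    error = payload.get("error", {}) if isinstance(payload, dict) else {}
    if not isinstance(error, dict):
        error = {}
    return ClaudeAPIError(
        status,
        error.get("type", "unknown_error"),
        error.get("message", ""),
    )
```

Because new error types may appear over time, unknown values fall through as non-retryable rather than raising a second error.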

Rate Limits

The Claude API imposes two types of limits on usage:

- Usage limits: Maximum monthly cost an organization can incur.

- Rate limits: Number of API requests an organization can make over a specific period.

These limits are based on usage tiers, which automatically increase as you reach certain thresholds. Limits are set at the organization level and are visible in the Plans and Billing section of the Anthropic Console.

Rate limits are measured in requests per minute (RPM), tokens per minute (TPM), and tokens per day (TPD) for each model class. If you exceed these limits, you will receive a 429 error.

| Model Tier | Requests per minute (RPM) | Tokens per minute (TPM) | Tokens per day (TPD) |
| --- | --- | --- | --- |
| Claude 3.5 Sonnet | 5 | 20,000 | 300,000 |
| Claude 3 Opus | 5 | 10,000 | 300,000 |
| Claude 3 Sonnet | 5 | 20,000 | 300,000 |
| Claude 3 Haiku | 5 | 25,000 | 300,000 |
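With limits this low, a client will see 429 responses sooner or later. One common way to cope is exponential backoff with a little jitter, sketched below. `call_with_backoff` is a hypothetical helper, `call` is any function returning a `(status, result)` pair, and retrying on 529 as well as 429 is an assumption made for this example.

```python
import random
import time


def call_with_backoff(call, max_attempts=5, base_delay=1.0,
                      sleep=time.sleep, retry_statuses=(429, 529)):
    """Retry `call` with exponentially growing waits on rate-limit statuses.

    `sleep` is injectable so the waiting can be replaced in tests.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        status, result = call()
        if status not in retry_statuses:
            return status, result
        if attempt < max_attempts - 1:
            delay = base_delay * (2 ** attempt)  # 1s, 2s, 4s, ...
            # Small random jitter avoids many clients retrying in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))
    # Out of attempts: hand the last failing response back to the caller.
    return status, result
```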

AI Testing & Validation with Kolena

Kolena is an AI/ML testing & validation platform that solves one of AI’s biggest problems: the lack of trust in model effectiveness. The use cases for AI are enormous, but AI lacks trust from both builders and the public. It is our responsibility to build that trust with full transparency and explainability of ML model performance, not just from a high-level aggregate ‘accuracy’ number, but from rigorous testing and evaluation at scenario levels.

With Kolena, machine learning engineers and data scientists can uncover hidden machine learning model behaviors, easily identify gaps in test data coverage, and learn where and why a model is underperforming, all in minutes, not weeks. Kolena's AI/ML model testing and validation solution helps developers build safe, reliable, and fair systems by allowing companies to quickly assemble precise test cases from their datasets, so they can scrutinize AI/ML models in the exact scenarios those models will face in the real world. The Kolena platform transforms AI development from an experimental practice into an engineering discipline that can be trusted and automated.