What Is the Transformer Model?

A transformer model is a type of neural network architecture that can handle sequential data, mainly for use in natural language processing (NLP) tasks. Unlike traditional recurrent neural networks (RNNs), transformers do not process data in a sequence but instead use a mechanism called self-attention.

The self-attention mechanism allows the model to weigh the importance of different parts of the input data simultaneously, rather than sequentially, leading to more efficient training and better performance on tasks involving long-range dependencies.

The transformer architecture was introduced in the paper “Attention is All You Need” (Vaswani et al., 2017), and it has since transformed the field of NLP by enabling more effective and scalable language models.

This is part of a series of articles about generative models

How Are Transformers Different from Other Neural Network Architectures?

Let’s see how the transformer architecture compares to other types of neural networks.

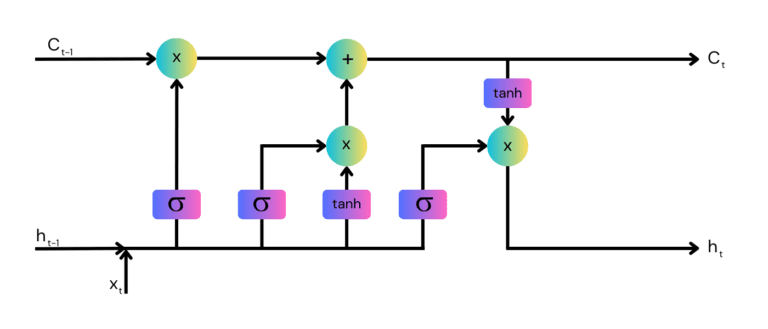

Transformers vs RNNs

RNNs process data sequentially, which can lead to difficulties in capturing long-range dependencies due to the vanishing gradient problem. This sequential processing also results in longer training times. RNNs often require the explicit alignment of sequences, making them more limited.

Transformers use self-attention mechanisms that allow for parallel processing of data. This parallelism speeds up training and enables the model to capture dependencies across the entire input sequence more effectively. Additionally, transformers can handle variable-length inputs without the need for explicit sequence alignment.

Transformers vs CNNs

Convolutional Neural Networks (CNNs) are useful for tasks involving spatial hierarchies, such as image processing. However, they have limitations in capturing long-range dependencies due to their localized receptive fields.

Transformers can model relationships between distant elements in the data, making them more suitable for tasks where global context is important. In image processing, Vision Transformers (ViTs) have shown that self-attention mechanisms can be applied to image patches, treating them as sequences, and achieve competitive performance with CNNs. This allows transformers to capture details across an entire image.

The Impact of Transformers: How Did Transformers Pave the Way to Modern LLMs?

Before transformers, machine learning models struggled with capturing long-range dependencies and parallel processing of data. Transformers overcame these limitations with their self-attention mechanism, which allows models to weigh the importance of different parts of the input data simultaneously. This innovation led to significant improvements in the efficiency and performance of language models.

Large language models (LLMs), such as OpenAI’s GPT series and Google’s BERT, use the transformer architecture to handle vast amounts of text data. The ability to pre-train on extensive text corpora and fine-tune on specific tasks has enabled LLMs to achieve results across a variety of NLP benchmarks and real-world applications.

Transformers have also set a new standard for scalability in AI models. Their architecture is well-suited for parallel processing on modern hardware, making it feasible to train models with billions or even trillions of parameters. This scalability allows LLMs to handle increasingly complex tasks and deliver more accurate and contextually relevant outputs.

Common Applications of the Transformer Model

Transformer models have many use cases across various industries. Here are some of the most common applications:

- Machine translation: Transformer models can understand and generate text in multiple languages with high accuracy. Unlike traditional sequence-to-sequence models, transformers can capture long-range dependencies in the input text, resulting in more coherent and contextually relevant translations.

- Text summarization: They condense lengthy documents into shorter, coherent summaries without losing essential information. This is useful for generating executive summaries, news briefs, and summarizing academic papers.

- Sentiment analysis: They can determine the emotional tone behind a body of text. This is done by evaluating the context in which words and phrases are used.

- Question answering: They can comprehend and generate context-aware responses. Transformers can read and understand large documents, identify relevant information, and provide precise answers to user queries.

- Autocompletion and predictive text: By predicting the next word or phrase based on the context of the preceding text, transformers can improve typing efficiency and accuracy.

How Does the Transformer Architecture Work?

Transformer models process input data through a series of layers that incorporate self-attention mechanisms and feedforward neural networks. The following diagram, based on the original Transformer paper, illustrates the architecture.

Source: ResearchGate

Here’s a step-by-step explanation of how the transformer architecture functions:

- Input embeddings: The first step involves converting the input data, such as a sentence, into numerical representations known as embeddings. These embeddings capture the semantic meaning of each token in the input sequence. They can be learned during training or derived from pre-trained word embeddings.

- Positional encoding: Since transformers do not process data sequentially, positional encoding is introduced to provide the model with information about the positions of tokens within the sequence. This involves adding patterns or vectors to the token embeddings, allowing the model to understand the order of the tokens.

- Multi-head attention: The self-attention mechanism operates in multiple “attention heads.” Each head captures different types of relationships between tokens by calculating attention weights. The self-attention mechanism uses softmax functions to determine these weights, enabling the model to focus on different parts of the input sequence simultaneously.

- Layer normalization and residual connections: To stabilize and accelerate training, transformer models normalize layers and use residual connections. Layer normalization helps to standardize the inputs to each layer, while residual connections allow gradients to flow through the network more effectively, preventing issues like vanishing gradients.

- Feedforward neural networks: After the self-attention layer, the output is passed through feedforward neural networks. These networks apply non-linear transformations to the token representations, enabling the model to capture complex patterns and relationships within the data.

- Output layer: For tasks such as neural machine translation, an additional decoder module is used. This decoder generates the output sequence based on the refined representations produced by the encoder layers.

- Training: Training transformer models involves supervised learning, where the model learns to minimize a loss function that quantifies the difference between its predictions and the actual ground truth. Common optimization techniques like Adam or stochastic gradient descent (SGD) are used during training.

- Inference: Once trained, the model can be used for inference on new data. During inference, the input sequence is fed through the pre-trained model to generate predictions or representations for the specific task at hand.

Types and Examples of Transformer Models

The transformer architecture includes the following types of models.

1. Bidirectional Transformers

Bidirectional transformers, such as BERT (Bidirectional Encoder Representations from Transformers), can read input data from both left and right contexts in all layers simultaneously. This architecture allows for a deep understanding of the context, improving performance on various NLP tasks such as question answering and name entity recognition. These models have set new standards in the accuracy of NLP applications by pre-training on a large corpus of text and then fine-tuning on specific tasks.

2. Generative Pretrained Transformers

Generative Pretrained Transformer (GPT) models, starting with GPT-1 and evolving through GPT-4, use an architecture optimized for understanding and generating human-like text. These models are pretrained on vast amounts of data and then fine-tuned for specific generation tasks. GPT models have demonstrated the ability to compose textual outputs that mimic human writing and achieve reasoning capabilities at near-human level, making them useful for applications like chatbots, data analysis, and content creation.

3. Bidirectional and Autoregressive Transformers

Models like XLNet combine the best of both worlds from bidirectional and autoregressive transformers. They capture bidirectional context by using permutations of the input sequence, which allows comprehensive contextual understanding like BERT, while also maintaining the autoregressive property of predicting the next word in the sequence like GPT. This dual approach helps in tackling a range of language understanding and generation tasks.

4. Transformers for Multimodal Tasks

Transformers have also been adapted for multimodal tasks that require processing different types of data simultaneously, such as integrating visual and textual information. These models, such as ViLBERT (Vision-and-Language BERT), process images and text in parallel through separate but interconnected streams, enabling tasks like image captioning and visual question answering. The latest generation of multimodal transformers includes leading commercial solutions like Google Gemini and OpenAI GPT-4o.

5. Vision Transformers

Vision Transformers (ViTs) apply the principles of transformers directly to images. By treating parts of images as sequences of pixels, ViTs utilize self-attention mechanisms to process visual information globally. This method contrasts with the local receptive fields used in CNNs. It is useful for tasks such as image classification, where capturing contextual relations across the entire image can lead to more accurate interpretations.

Related content: Read our guide to LLM vs NLP

AI Testing & Validation with Kolena

Kolena is an AI/ML testing & validation platform that solves one of AI’s biggest problems: the lack of trust in model effectiveness. The use cases for AI are enormous, but AI lacks trust from both builders and the public. It is our responsibility to build that trust with full transparency and explainability of ML model performance, not just from a high-level aggregate ‘accuracy’ number, but from rigorous testing and evaluation at scenario levels.

With Kolena, machine learning engineers and data scientists can uncover hidden machine learning model behaviors, easily identify gaps in the test data coverage, and truly learn where and why a model is underperforming, all in minutes not weeks. Kolena’s AI / ML model testing and validation solution helps developers build safe, reliable, and fair systems by allowing companies to instantly stitch together razor-sharp test cases from their data sets, enabling them to scrutinize AI/ML models in the precise scenarios those models will be unleashed upon the real world. Kolena platform transforms the current nature of AI development from experimental into an engineering discipline that can be trusted and automated.