What Is Zero-Shot Learning?

Zero-shot learning (ZSL) is a machine learning technique in which a model recognizes objects, scenes, or concepts from classes it never saw during training. Unlike traditional supervised learning, it does not require any labeled examples of the target classes during the training phase.

Instead, it uses knowledge of seen classes to make inferences about unseen classes through shared attributes or semantic relationships. This capability is particularly useful in situations where data labeling is expensive or impractical.

By leveraging attributes and related external information from known categories, zero-shot learning models extend their capability to unknown categories without additional data collection or manual labeling effort.

How Does Zero-Shot Learning Work?

Zero-shot learning operates through a combination of feature extraction, attribute mapping, and semantic relationships. The process generally involves the following steps:

- Feature extraction: During training, the model extracts features from images, text, or other data types of seen classes. These features can include visual aspects (like shapes and colors) or textual descriptors (like words and phrases).

- Attribute mapping: The model learns to map these features to a set of attributes or embeddings. Attributes are human-interpretable qualities (e.g., “has wings”, “is red”) shared between seen and unseen classes. Embeddings are dense vector representations capturing semantic relationships among classes.

- Training with seen classes: Using seen classes, the model learns the relationship between the extracted features and the corresponding attributes or embeddings. This step involves training on labeled data where each class’s attributes are known.

- Inference on unseen classes: When presented with an unseen class, the model uses the learned mappings to predict attributes or embeddings from the features of the new data. For example, given an image of an unseen animal, the model predicts its attributes based on what it learned from seen animals with similar features.

- Classification of unseen classes: The final step involves comparing the predicted attributes or embeddings against a predefined set for unseen classes. The model assigns the class label whose attributes or embeddings most closely match the predictions.
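The steps above can be sketched end to end on toy data. This is a minimal illustration, not a reference implementation: it assumes a linear feature-to-attribute map fitted with ridge regression, and it fakes the feature extractor with a random `mixing` matrix. The function names, the three attributes (striped, hooves, wings), and the class tables are all hypothetical.

```python
import numpy as np

def fit_feature_to_attribute_map(X, A, reg=1.0):
    """Ridge regression: W minimizes ||X W - A||^2 + reg * ||W||^2."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + reg * np.eye(d), X.T @ A)

def classify_unseen(x, W, class_attributes):
    """Predict attributes for x, then pick the class with the most similar signature."""
    predicted = x @ W
    names = list(class_attributes)
    signatures = np.array([class_attributes[n] for n in names], dtype=float)
    # Cosine similarity between the predicted attributes and each class signature.
    sims = (signatures @ predicted) / (
        np.linalg.norm(signatures, axis=1) * np.linalg.norm(predicted) + 1e-12
    )
    return names[int(np.argmax(sims))]

# Toy setup (assumption): features are a noisy linear mix of the attributes.
rng = np.random.default_rng(0)
mixing = rng.normal(size=(3, 8))  # stand-in for a real feature extractor

def fake_features(attributes, n):
    clean = np.asarray(attributes, dtype=float) @ mixing
    return clean + 0.05 * rng.normal(size=(n, 8))

# Attribute order: (striped, hooves, wings).
seen = {"tiger": [1, 0, 0], "horse": [0, 1, 0], "eagle": [0, 0, 1]}
unseen = {"zebra": [1, 1, 0], "wasp": [1, 0, 1]}

# Training uses seen classes only.
X = np.vstack([fake_features(a, 20) for a in seen.values()])
A = np.vstack([np.tile(a, (20, 1)) for a in seen.values()])
W = fit_feature_to_attribute_map(X, A)

# Inference on a sample from a class that never appeared in training.
zebra_sample = fake_features(unseen["zebra"], 1)[0]
prediction = classify_unseen(zebra_sample, W, unseen)
print(prediction)
```

The toy data is deliberately easy because the features are linear in the attributes. Real image features are not, which is why practical systems learn the mapping with a deep network rather than a single linear layer.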

The Role of Zero-Shot Learning in Large Language Models

Large language models (LLMs) leverage zero-shot learning to handle a vast array of tasks without task-specific training. This capability stems from the models’ pre-training on extensive and diverse text corpora, enabling them to understand and generate human-like text based on context alone.

By utilizing zero-shot learning, LLMs can perform tasks such as translation, summarization, question answering, and more by interpreting the task requirements from natural language instructions.

For example, given the prompt “Translate the following English sentence to Indonesian: ‘Hello, how are you?’”, an LLM can produce the correct translation without having been explicitly trained on that specific translation task. This flexibility is crucial for applications requiring adaptability to new and varied tasks, reducing the need for additional training data and enabling rapid deployment of AI solutions across different domains.
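As a rough illustration of what "zero-shot" means at the prompt level, the sketch below builds a prompt that states the task in natural language and contains no worked examples. The helper `build_prompt` and its `Input:`/`Output:` layout are assumptions made for this example, not part of any particular LLM API. Sending the prompt to a model is left out.

```python
def build_prompt(instruction, text, examples=()):
    """Build a prompt; with no examples it is zero-shot, with some it is few-shot."""
    parts = [instruction]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    parts.append(f"Input: {text}\nOutput:")
    return "\n\n".join(parts)

# Zero-shot: only the instruction and the input, no demonstrations.
zero_shot_prompt = build_prompt(
    "Translate the following English sentence to Indonesian.",
    "Hello, how are you?",
)
print(zero_shot_prompt)
```

Passing a non-empty `examples` sequence to the same helper would turn the prompt into a few-shot one, which makes the difference between the two settings easy to see.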

Types and Examples of Zero-Shot Learning

Zero-shot learning can be attribute-based, semantic embedding-based, generalized, or multi-modal.

Attribute-Based Zero-Shot Learning

Attribute-based zero-shot learning (AZSL) relies on a predefined set of attributes common to seen and unseen classes. Each attribute corresponds to a visual or contextual quality that is observable and quantifiable. For example, in wildlife species identification, attributes could include color patterns, habitat, and physical features like horns or wings.

Using these attributes, AZSL models learn a function that predicts the attributes from the input features of seen classes, abstracting the learning process away from specific class identities toward general attribute recognition. When encountering an unseen class, the model uses its learned attribute prediction capability to infer the class whose attribute description best matches the predicted attributes.
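The inference step can be sketched as follows. The example assumes that per-attribute classifiers already exist and have produced a probability for each attribute. It then scores each unseen class by how well its binary attribute signature agrees with those probabilities. This loosely follows the idea of direct attribute prediction but omits attribute priors. The attribute names, class signatures, and probabilities are invented for illustration.

```python
import numpy as np

def score_classes(attr_probs, class_signatures):
    """Log-likelihood of each binary class signature given per-attribute probabilities."""
    p = np.clip(np.asarray(attr_probs, dtype=float), 1e-6, 1 - 1e-6)
    scores = {}
    for name, signature in class_signatures.items():
        s = np.asarray(signature, dtype=float)
        # Attributes the class has contribute log(p); the others contribute log(1 - p).
        scores[name] = float(np.sum(s * np.log(p) + (1 - s) * np.log(1 - p)))
    return scores

# Attribute order (hypothetical): has_horns, has_wings, is_striped.
unseen_signatures = {
    "zebra": [0, 0, 1],
    "bongo": [1, 0, 1],
    "bat": [0, 1, 0],
}

# Assumed output of attribute classifiers for one image.
attr_probs = [0.10, 0.05, 0.90]

scores = score_classes(attr_probs, unseen_signatures)
best = max(scores, key=scores.get)
print(best)
```

Working in log space keeps the score numerically stable when the attribute list grows to dozens or hundreds of entries.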

Semantic Embedding-Based Zero-Shot Learning

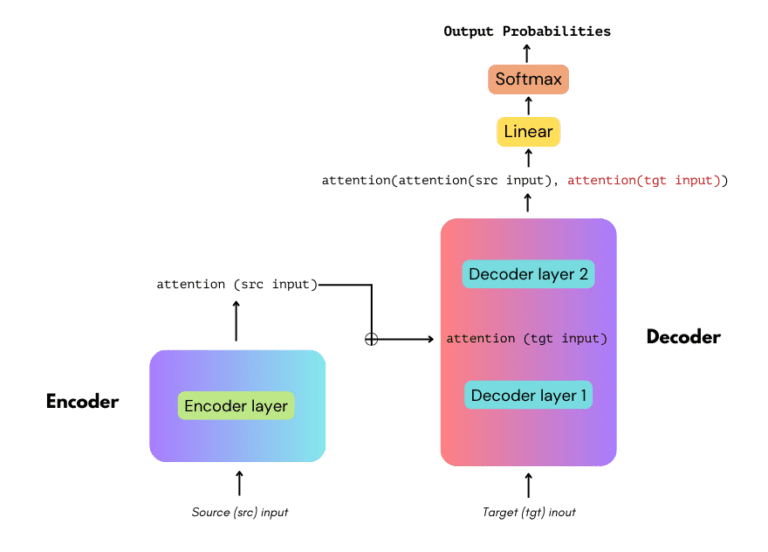

Semantic embedding-based zero-shot learning (SEZSL) uses embeddings or dense vector representations derived from the textual or symbolic descriptions of the classes. For example, using word vectors from natural language processing, classes are embedded into a semantic space that captures relationships between different classes based on their descriptions.

In SEZSL, a model learns to map visual features to these semantic embeddings during training. Upon encountering a new class, the model compares its predicted embedding for the new visual input against the embeddings of unseen classes and classifies the input by selecting the closest embedding match in the semantic space. Because the class embeddings are derived from text, a new class can be added by supplying only its name or description.

Generalized Zero-Shot Learning (GZSL)

Generalized zero-shot learning (GZSL) is an extension of ZSL where the classification task is not restricted to unseen classes but includes both seen and unseen classes during testing. This represents a more realistic scenario where the model needs to recognize classes from a mixed set of seen and unseen categories.

GZSL poses additional challenges, primarily because the model tends to be biased towards classes seen during training. To address this, methods such as calibrating the scores (for example, penalizing seen-class scores by a constant) or synthesizing features for unseen classes with generative models are used to balance the model’s performance across seen and unseen classes, so that it does not systematically favor seen classes in its predictions.
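One simple way to counter the seen-class bias is to subtract a constant from the scores of seen classes before taking the argmax, an idea known as calibrated stacking. The sketch below shows that adjustment together with the harmonic mean of seen and unseen accuracy, a commonly reported GZSL metric. The scores, the class list, and the value of `gamma` are made up for illustration. In practice `gamma` would be tuned on validation data.

```python
import numpy as np

def calibrated_predict(scores, seen_mask, gamma):
    """Subtract gamma from seen-class scores, then return the index of the best class."""
    adjusted = np.asarray(scores, dtype=float) - gamma * np.asarray(seen_mask, dtype=float)
    return int(np.argmax(adjusted))

def harmonic_mean(acc_seen, acc_unseen):
    """Harmonic mean of seen and unseen accuracy; low if either one is low."""
    if acc_seen + acc_unseen == 0:
        return 0.0
    return 2 * acc_seen * acc_unseen / (acc_seen + acc_unseen)

classes = ["horse", "tiger", "zebra"]   # zebra is the unseen class
seen_mask = [1, 1, 0]
scores = [0.62, 0.30, 0.55]             # assumed model scores for a zebra image

uncalibrated = calibrated_predict(scores, seen_mask, gamma=0.0)
calibrated = calibrated_predict(scores, seen_mask, gamma=0.1)
print(classes[uncalibrated], classes[calibrated])
```

Raising `gamma` trades seen-class accuracy for unseen-class accuracy, so the harmonic mean is a convenient single number to optimize when choosing it.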

Multi-Modal Zero-Shot Learning

Multi-modal zero-shot learning incorporates information from multiple modalities like text, image, audio, or other sensor data to make predictions about unseen classes. This approach benefits from the diverse set of features available from different sources to improve the understanding and representation of both seen and unseen classes.

For example, in recognizing activities in videos, multi-modal ZSL might use video frames (visual), descriptions or subtitles (textual), and sounds (audio) to form a representation of an activity. The richness of multi-modal data can enhance the model’s inference capabilities, making it capable of handling complex, real-world tasks with multiple data types.
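A minimal sketch of how evidence from several modalities might be combined is shown below. It assumes each modality has already produced a similarity score for every candidate activity, and it fuses them with a weighted average (late fusion). The activity names, scores, and weights are hypothetical. Real systems often learn a joint embedding instead.

```python
import numpy as np

def fuse_scores(per_modality_scores, weights):
    """Weighted average of per-modality class scores (late fusion)."""
    total_weight = sum(weights[m] for m in per_modality_scores)
    fused = sum(
        weights[m] * np.asarray(s, dtype=float)
        for m, s in per_modality_scores.items()
    )
    return fused / total_weight

activities = ["playing guitar", "chopping wood", "applauding"]

# Assumed similarity of one video clip to each activity, per modality.
scores = {
    "visual": [0.40, 0.45, 0.15],
    "text": [0.60, 0.30, 0.10],
    "audio": [0.70, 0.10, 0.20],
}
weights = {"visual": 1.0, "text": 1.0, "audio": 1.0}

fused = fuse_scores(scores, weights)
print(activities[int(np.argmax(fused))])
```

In this toy case the visual scores alone slightly favor the wrong activity, and the text and audio scores correct it, which is the kind of benefit multi-modal approaches aim for.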

Zero-Shot Learning vs. Few-Shot Learning

Zero-shot learning (ZSL) and few-shot learning (FSL) are both techniques aimed at reducing the need for large labeled datasets, but they differ in their approaches and requirements:

Data Requirements

ZSL requires no labeled examples of the target classes during the training phase. Instead, it leverages auxiliary information such as attributes, semantic descriptions, or relationships with known classes to make inferences about unseen classes. This approach is particularly useful when acquiring labeled data is impractical, expensive, or time-consuming.

FSL operates with a small number of labeled examples for each target class. Typically, it involves using 1 to 5 samples per class. This small dataset is used to fine-tune a model that has been pre-trained on a larger, more diverse set of classes. The ability to generalize from this limited data set is crucial for FSL.

Training Approach

ZSL’s training process focuses on learning a mapping from visual or textual features to a shared set of attributes or semantic embeddings. For example, the model may learn to associate visual patterns with specific attributes (e.g., “striped” or “furry”) or map textual descriptions to a semantic space. During inference, the model uses these learned associations to recognize new classes based on their attributes or semantic descriptions.

FSL often uses meta-learning strategies, where the model is trained on a variety of tasks to learn how to adapt quickly to new tasks with minimal data. One popular approach is episodic training, where the model is exposed to many small tasks during training, each mimicking the few-shot scenario. Techniques like model-agnostic meta-learning (MAML), prototypical networks, and matching networks can improve the model’s ability to generalize from few examples.
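To make the few-shot side concrete, the sketch below shows only the inference rule used by prototypical networks: each class is represented by the mean of its support embeddings, and a query is assigned to the nearest prototype. The embeddings are hard-coded stand-ins for the output of a trained encoder. The encoder itself and the episodic training loop are omitted.

```python
import numpy as np

def class_prototypes(support_embeddings, support_labels):
    """Average the support embeddings of each class into one prototype."""
    labels = np.asarray(support_labels)
    return {
        str(c): support_embeddings[labels == c].mean(axis=0)
        for c in sorted(set(support_labels))
    }

def classify_query(query, prototypes):
    """Return the class whose prototype is closest in squared Euclidean distance."""
    return min(prototypes, key=lambda c: float(np.sum((query - prototypes[c]) ** 2)))

# A 2-way, 3-shot episode with assumed 2-D embeddings.
support = np.array([
    [1.0, 0.1], [0.9, 0.0], [1.1, -0.1],   # "cat" examples
    [0.0, 1.0], [0.1, 0.9], [-0.1, 1.1],   # "dog" examples
])
labels = ["cat"] * 3 + ["dog"] * 3

prototypes = class_prototypes(support, labels)
predicted = classify_query(np.array([0.8, 0.2]), prototypes)
print(predicted)
```

Unlike the zero-shot setting, the class representation here comes from a handful of labeled examples rather than from attributes or text descriptions.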

Application Scenarios

ZSL is useful in scenarios where new classes emerge frequently, and obtaining labeled examples is not feasible. For example, ZSL can be used in wildlife conservation to identify new species of animals based on descriptions and attributes learned from known species. Another application is in the medical field, where ZSL can help diagnose rare diseases by leveraging descriptions of symptoms and characteristics from known diseases.

FSL is suitable for applications where a small number of labeled examples can be provided. It is often used in personalized applications, such as face recognition systems that need to adapt to new users with only a few photos, or voice recognition systems that need to learn new voices with minimal training data. FSL is also used in robotics, where robots learn new tasks or recognize new objects with limited demonstrations.

Zero-Shot Learning Limitations

ZSL has several potential drawbacks and limitations.

Domain Shift

Domain shift refers to the scenario where the distribution of the unseen class data differs significantly from that of the seen classes used to train a ZSL model. This discrepancy can hamper the model’s performance, as the learned mappings and attributes may not apply effectively to the new domain.

Handling domain shift involves techniques like domain adaptation, where the model is updated to minimize the disparity between training and testing environments. However, this remains a challenging issue in ZSL, especially in dynamically changing settings where new classes can differ widely from the training set.

Hubness

Hubness is a phenomenon where certain data points, called hubs, become nearest neighbors to an unusually large number of points in high-dimensional spaces, including those used in ZSL semantic embeddings. This can lead to skewed distributions and biased class predictions, where a few hubs dominate the nearest-neighbor lists, reducing the diversity and accuracy of the outputs.

Addressing hubness in ZSL involves developing algorithms that reduce the incidence of such hubs or incorporate mechanisms to mitigate their effects, ensuring more reliable and balanced predictions across different classes.
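Before mitigating hubness, it helps to measure it. A common diagnostic is the k-occurrence count: how many times each candidate point appears among the k nearest neighbors of the queries. The sketch below computes it on random Gaussian data, which stands in for projected samples and class embeddings. The dimensions and sample sizes are arbitrary assumptions.

```python
import numpy as np

def k_occurrence(queries, candidates, k):
    """Count how often each candidate is among the k nearest neighbors of a query."""
    dists = np.linalg.norm(queries[:, None, :] - candidates[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return np.bincount(nearest.ravel(), minlength=len(candidates))

rng = np.random.default_rng(0)
queries = rng.normal(size=(300, 50))      # e.g. samples projected into the embedding space
candidates = rng.normal(size=(100, 50))   # e.g. class embeddings

counts = k_occurrence(queries, candidates, k=5)
print("mean count:", counts.mean(), "max count:", counts.max())
```

A heavily skewed distribution of `counts`, with a few candidates far above the mean, is the signature of hubs. The same function can be rerun after applying a mitigation to check whether the skew has decreased.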

Semantic Loss

Semantic loss occurs when the semantic descriptors or attributes used in ZSL do not fully capture the nuances needed to distinguish between different classes effectively. If the attributes are overly generic or inadequately detailed, the model may struggle to differentiate between closely related but distinct categories, leading to errors in classification.

Improving the specificity and detail of semantic descriptions and using richer data sources for attribute extraction can help minimize semantic loss. Techniques that dynamically refine attributes based on feedback or additional data can also enhance the model’s understanding and adaptability, mitigating the impact of semantic loss in practical applications.

AI Testing & Validation with Kolena

Kolena is an AI/ML testing & validation platform that solves one of AI’s biggest problems: the lack of trust in model effectiveness. The use cases for AI are enormous, but AI lacks trust from both builders and the public. It is our responsibility to build that trust with full transparency and explainability of ML model performance, not just from a high-level aggregate ‘accuracy’ number, but from rigorous testing and evaluation at scenario levels.

With Kolena, machine learning engineers and data scientists can uncover hidden machine learning model behaviors, easily identify gaps in the test data coverage, and truly learn where and why a model is underperforming, all in minutes, not weeks. Kolena’s AI/ML model testing and validation solution helps developers build safe, reliable, and fair systems by allowing companies to instantly stitch together razor-sharp test cases from their data sets, enabling them to scrutinize AI/ML models in the precise scenarios in which those models will be deployed in the real world. The Kolena platform transforms AI development from an experimental practice into an engineering discipline that can be trusted and automated.