GPT-4 is bigger and better than GPT-3. It can draft eloquent speeches, pass standardized exams, and even interpret images. Since releasing GPT-4 on March 14, 2023, OpenAI has continued to iterate on the model to improve its performance on the millions of queries it receives each day. But is the latest version of GPT-4 in OpenAI’s API, called “gpt-4”, actually better than the initial version from March, called “gpt-4-0314”?

Written from the perspective of a machine learning engineer at Kolena, this article continues a series of discussions on a testing paradigm for LLMs that compares the performance of GPT models under different scenarios.

While the overall behavior of “gpt-4” might be better than that of “gpt-4-0314” according to various benchmarks and metrics, “better” is a relative term. Users have reported online that they recently experienced regressions in GPT-4’s performance in a variety of contexts. In one viral example, GPT-4 could no longer identify 17077 as a prime number as reliably as it could before.

Naturally, relying on the most up-to-date model is problematic if its subjective and objective performance declines over time. What other regressions might secretly exist?

We can test for hidden regressions in GPT-4 by using the CoQA (Conversational Question Answering)** dataset. The CoQA dataset contains multiple articles, each with a series of corresponding questions, where understanding question n is necessary for answering question n+1. Taking an article on sports history as an example, here are some potential questions:

1. Who is the most decorated Olympian?

2. Which country are they from?

3. How many gold medals do they have?

These questions cannot be answered in isolation: without the answer to the first question, we would not know who the person of interest is in the second and third.
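The dependency between questions means each prompt has to carry the earlier turns of the conversation. The following is a minimal sketch of how such a prompt could be assembled. The function name `build_prompt`, the `Q1:`/`A1:` layout, and the placeholder strings are assumptions made for illustration; they are not the prompt format used in the experiments described here.

```python
from typing import List, Tuple


def build_prompt(article: str, history: List[Tuple[str, str]], question: str) -> str:
    """Combine the article, the prior (question, answer) turns, and the new question.

    Hypothetical layout: the article first, then numbered Q/A pairs,
    then the new question with an empty answer slot for the model to fill.
    """
    lines = ["Article:", article.strip(), ""]
    for i, (prev_q, prev_a) in enumerate(history, start=1):
        lines.append(f"Q{i}: {prev_q}")
        lines.append(f"A{i}: {prev_a}")
    n = len(history) + 1
    lines.append(f"Q{n}: {question}")
    lines.append(f"A{n}:")
    return "\n".join(lines)


# Placeholder article and answer text; question 2 only makes sense
# because question 1 and its answer are included in the prompt.
history = [("Who is the most decorated Olympian?", "<answer to question 1>")]
prompt = build_prompt("<article text>", history, "Which country are they from?")
print(prompt)
```

Passing the full history on every turn keeps pronouns such as "they" resolvable, at the cost of longer prompts as the conversation grows.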

Findings

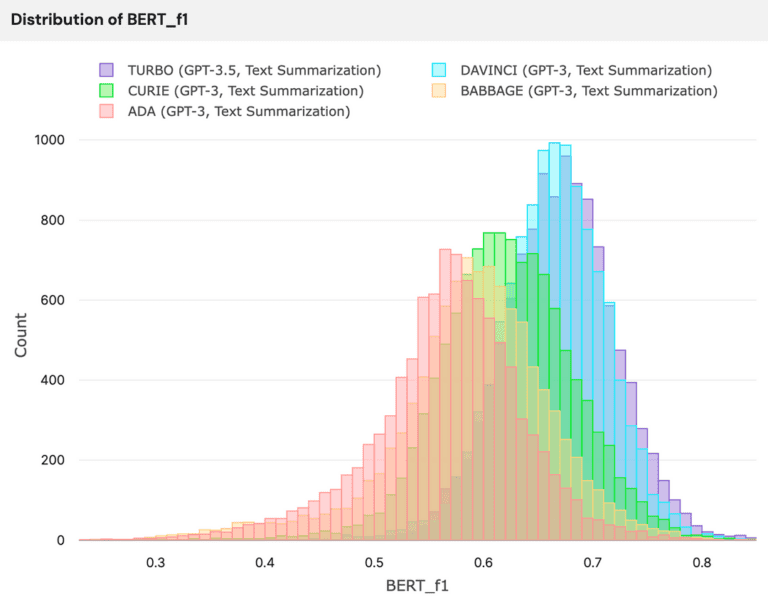

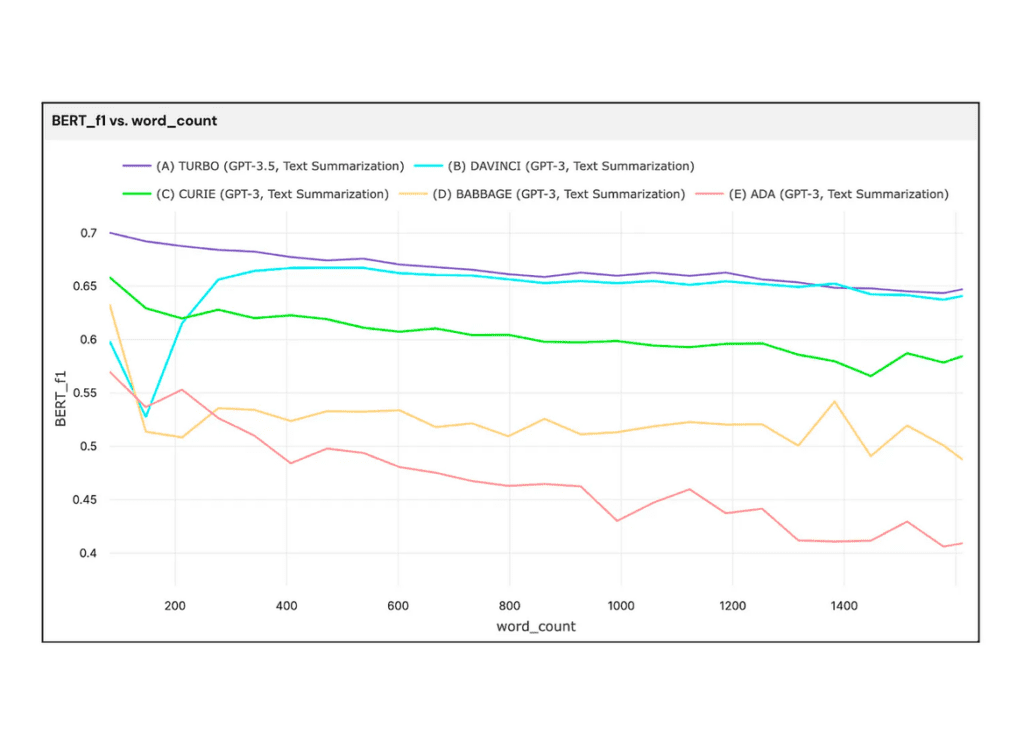

At a high level, GPT-4 performs significantly better than GPT-3, but it’s still not perfect:

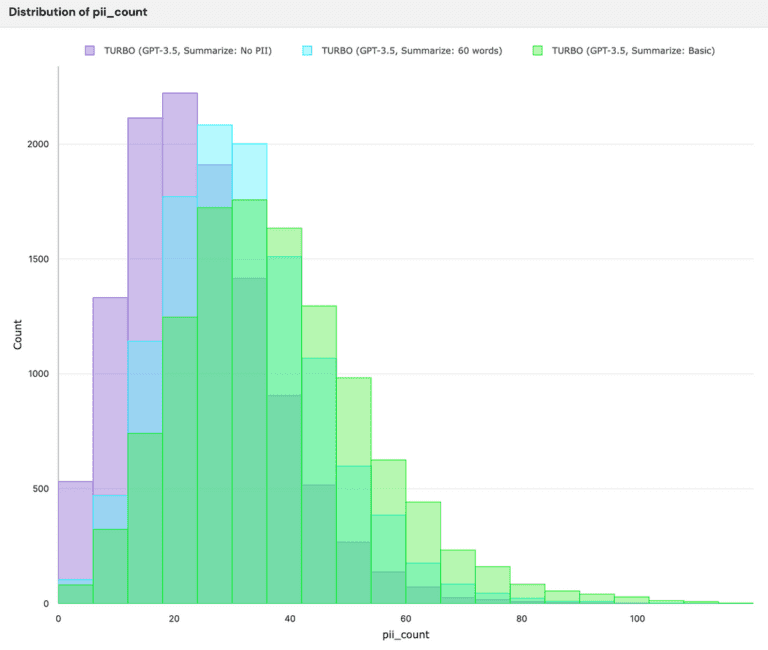

Note: “gpt-3” is the latest Turbo model of the GPT-3.5 series, and n_correct is the number of questions for which the average of the answer’s BERT_F1 and ROUGE_1 scores is greater than 0.75.
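The definition of n_correct in the note can be written out directly. This is a small sketch assuming the per-question BERT_F1 and ROUGE_1 scores have already been computed elsewhere; the function names and the example scores are hypothetical and are not taken from the results above.

```python
from typing import Iterable, Tuple

CORRECT_THRESHOLD = 0.75  # threshold stated in the note


def is_correct(bert_f1: float, rouge_1: float, threshold: float = CORRECT_THRESHOLD) -> bool:
    """A question counts as correct when the mean of the two scores exceeds the threshold."""
    return (bert_f1 + rouge_1) / 2 > threshold


def n_correct(scores: Iterable[Tuple[float, float]]) -> int:
    """Count the (BERT_F1, ROUGE_1) pairs that pass the correctness rule."""
    return sum(is_correct(bert_f1, rouge_1) for bert_f1, rouge_1 in scores)


# Made-up scores for four questions, for illustration only.
example_scores = [(0.95, 0.80), (0.90, 0.70), (0.70, 0.60), (0.60, 0.40)]
print(n_correct(example_scores))  # the first two pairs average above 0.75
```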

From the above, how can “gpt-4-0314” score worse on the metrics (BERT_F1 and ROUGE_1) yet answer more questions correctly than “gpt-4”? Perhaps both models answer the same questions incorrectly, but there is no guarantee that the failure sets of “gpt-4” and “gpt-4-0314” are the same. Under the assumption that a newer model should perform better, the aggregate metrics alone cannot explain this difference or regression. We can dig deeper into the potential root causes of failure by logically breaking the data down into smaller groups.
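A toy example shows how a model can have a lower mean score yet more answers above a threshold, and how two failure sets can differ. All scores and question IDs below are invented for illustration, and `failed_ids` is a hypothetical helper; each value stands in for the per-question average of BERT_F1 and ROUGE_1.

```python
from statistics import mean
from typing import Dict, Set

THRESHOLD = 0.75


def failed_ids(scores: Dict[str, float], threshold: float = THRESHOLD) -> Set[str]:
    """Return the IDs of questions whose score does not exceed the threshold."""
    return {qid for qid, score in scores.items() if score <= threshold}


# Invented per-question scores for an older and a newer model.
old_scores = {"q1": 0.80, "q2": 0.80, "q3": 0.80, "q4": 0.40}
new_scores = {"q1": 0.99, "q2": 0.70, "q3": 0.70, "q4": 0.99}

old_failed = failed_ids(old_scores)
new_failed = failed_ids(new_scores)

# The older model has the lower mean but fewer failures.
print(mean(old_scores.values()), len(old_scores) - len(old_failed))
print(mean(new_scores.values()), len(new_scores) - len(new_failed))

# Set differences separate hidden regressions from fixes.
regressions = new_failed - old_failed  # newly failing in the newer model
fixes = old_failed - new_failed        # failing before, passing now
print(sorted(regressions), sorted(fixes))
```

A few very high scores can lift the mean while several other answers slip just below the threshold, which is why the aggregate metric and the count of correct answers can point in opposite directions.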

When we stratify the CoQA dataset by the data source of each article, we find that the newest GPT-4 model performs better on question-answer data from Wikipedia articles, but worse overall and on every other data source.

A comparison of “gpt-4” and “gpt-4-0314” by BERT_F1, ROUGE_1, and the count of correct answers, taken from Kolena

The image above compares “gpt-4” against “gpt-4-0314” as the benchmark, highlighting where the number of correct answers improved or declined for each data source. In terms of the number of correct answers, “gpt-4” improves only on the Wikipedia datapoints and declines in performance everywhere else.
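The stratified comparison can be sketched in a few lines. This assumes each evaluated question has been labeled with its data source and with whether each model answered it correctly; the `Result` type, the function name, and the rows are hypothetical placeholders, not the measured results.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Result(NamedTuple):
    source: str              # data source of the article, e.g. "wikipedia"
    correct_baseline: bool   # benchmark model answered correctly
    correct_candidate: bool  # newer model answered correctly


def correct_delta_by_source(results: Iterable[Result]) -> Dict[str, int]:
    """Per source, the candidate's correct count minus the baseline's correct count."""
    delta: Dict[str, int] = defaultdict(int)
    for r in results:
        delta[r.source] += int(r.correct_candidate) - int(r.correct_baseline)
    return dict(delta)


# Placeholder rows for illustration only.
rows = [
    Result("wikipedia", False, True),
    Result("wikipedia", True, True),
    Result("cnn", True, False),
    Result("gutenberg", True, False),
    Result("mctest", True, True),
    Result("mctest", True, False),
]

deltas = correct_delta_by_source(rows)
print(deltas)                # positive means the candidate improved on that source
print(sum(deltas.values()))  # overall change in the number of correct answers
```

Reporting the per-source delta next to the overall total makes it visible when a gain in one stratum is outweighed by losses in the others.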

Analysis

Does this reveal that “gpt-4” is a fine-tuned version of “gpt-4-0314” on Wikipedia articles? Unfortunately, we don’t know.

Can we then say that GPT-4 has become worse? By this measure, not necessarily. While academia considers Wikipedia to be an unreliable source of information, many people still regularly use it for quick and accessible information. If OpenAI wants GPT to answer any question in any domain, having a complete comprehension of Wikipedia is more valuable than understanding news articles when users make millions of random queries each day. News articles tend to have common themes anyway, and the average person might not ask GPT questions pertaining to news articles on topics absent within Wikipedia.

Before we stratified the dataset by data source, there was no concrete explanation for why “gpt-4-0314” produced more correct answers than “gpt-4”. With just one stratification, we gain one plausible explanation of why and how the models differ.

Conclusion

Over time, GPT-4 has regressed in conversational question answering for multiple data sources, but improved in performance for queries involving Wikipedia articles.

Being able to identify hidden regressions should be a priority for all engineers before deploying models to production. Finding hidden regressions for LLMs is not trivial, but it becomes easier with the right approach. The best model is not necessarily the one with the best overall performance but the one with the best results under the scenarios that matter most.

We’ll dig deeper into more stratifications of CoQA to further understand how GPT-4 has changed over time in a future blog post. Stay tuned!

**The CoQA dataset contains data from seven different datasets with different licenses. In this article, we do not reveal any data from the dataset, and we used only the data from these commercially available data sources for testing and analysis: Gutenberg, CNN, MCTest, and Wikipedia, which carry a CC BY-SA 4.0, MSR-LA, or Apache license.

Originally posted in Towards Data Science